
Patent granted for engineered bacteria ‘secreting therapeutic proteins’

“Engineered bacteria that secrete therapeutic polypeptides, pharmaceutical compositions comprising the bacteria, methods for producing recombinant polypeptides, and methods for using the bacteria for diagnostic and therapeutic purposes are provided,” the abstract reads.

-

Taylor receives Harold Osher Award for research on sleep problems in children with autism

“Taylor, an emerging researcher in the field of mental and behavioral health, was presented with the Harold Osher Award for Excellence in Clinical and Population Health at the annual Lambrew Research Retreat sponsored by the MaineHealth Research Institute on May 1st, 2024. One hundred and thirty abstracts were submitted for the award, and after a blind panel review, Taylor’s abstract, ‘Sleep Problems in Children with Autism at the Time of Psychiatric Hospitalization in Relation to Parental Stress and Self-Efficacy,’ was awarded first place in the category of clinical and population health research.”

-

Wesley wins 2023 Fox International Case Writing Competition

In a post on LinkedIn, David Wesley wrote that he was “honored to be the winner of the 2023 Fox International Case Writing Competition with the case study, ‘Anheuser-Busch and the Anti-Transgender Boycott of Bud Light.’” Wesley performed this research “to show how Bud Light, as the leading beer brand in America, had to deal with a crisis situation that resulted from its campaign with a transgender influencer, Dylan Mulvaney.” Wesley continued, “The case highlights the duty of care that businesses have to their stakeholders, especially in times of crisis.”

-

‘Evolve or Dissolve: Shaping Your Corporate Culture for a Remote Reality’

As remote work becomes more prevalent, distinguished professor Paula Caligiuri identifies a significant challenge facing corporate cultures and offers five strategies for shaping them: emphasize regular communication and visibility; foster connection and collaboration; reinforce and adapt company values; use myGiide to socialize employees; and recognize and reward cultural contributions.

-

Zheng receives funding for ‘innovative’ hybrid fuel cells

“Mechanical and industrial engineering associate professor Yi Zheng received a research grant of $208,957 to work on a three-year project, ‘Innovative Hybrid PEM Hydrogen Fuel Cell,’ from THETA LLC of Fall River, Massachusetts. This project will study the biomimetic hybrid hydrogen fuel cell as a practical alternative, addressing key barriers to widespread adoption. This involves developing non-platinum (Pt)-based gas diffusion layer/carbon electrodes, implementing enzymatic catalysts, and constructing a hybrid fuel cell to achieve high current and power densities by increasing the volumetric loading and conductivity of the hybrid biocatalyst.”

-

Oakes named to ASME top 25 Watch List

The “American Society of Mechanical Engineers recognized bioengineering associate professor Jessica Oakes on the Watch List of top 25 early career professionals. Her ASME magazine profile highlighted her work ‘What Happens When We Inhale Things?’ with applications from wildfire smoke to e-cigarettes.”

-

Zhu receives ECS Toyota Young Investigator Fellowship for research on sustainable batteries

“Mechanical and industrial engineering assistant professor Juner Zhu is one of only three individuals to receive an Electrochemical Society Toyota Young Investigator Fellowship this year. He will conduct research to assess the condition of batteries in electric vehicles using mechano-electrochemical techniques that will identify a battery’s physical changes to determine its overall health.”

-

Horsley receives NSF grant toward ‘catalyzing the formation and success of small business’

“Electrical and computer engineering professor and deputy director of the Institute for NanoSystems Innovation David Horsley, in collaboration with Innovation Impact International, was awarded a $299,898 NSF EAGER grant for ‘Catalyzing Deep Tech Innovation and Entrepreneurship via International Partnerships.’ This project has the potential to enhance the impact of NSF-sponsored research by catalyzing the formation and success of small business concerns founded by the NSF Small Business Innovation Research and Small Business Technology Transfer (SBIR and STTR) programs.”

-

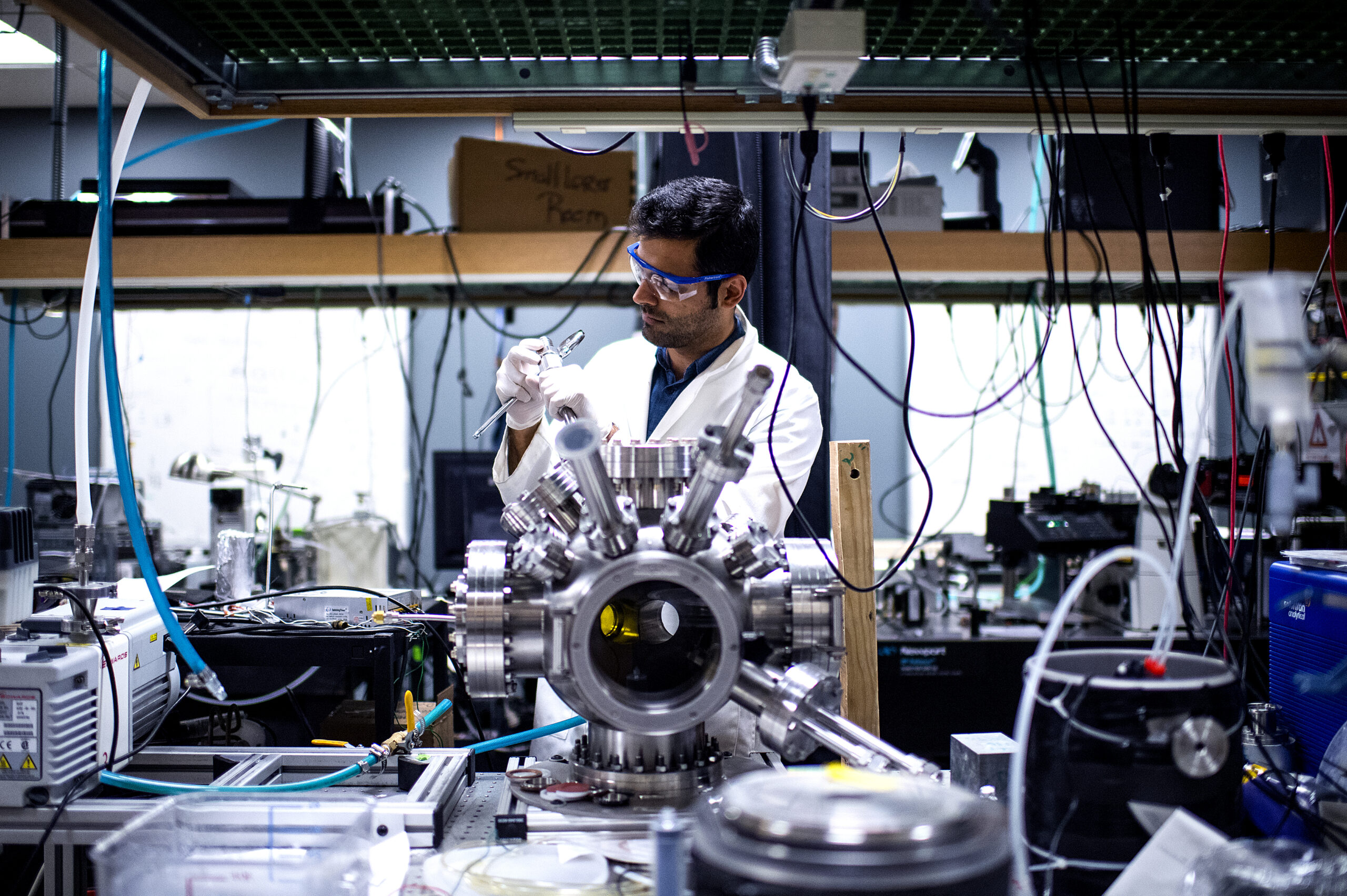

‘Operando Raman Spectroscopy Reveals Degradation Byproducts From Ionomer Oxidation in Anion Exchange Membrane Water Electrolyzers’

“This work showcases the discovery of degradation mechanisms for nonplatinum group metal catalyst (PGM free) based anion exchange membrane water electrolyzers (AEMWE) that utilize hydroxide ion conductive polymer ionomers and membranes in a zero gap configuration. An entirely unique and customized test cell was designed from the ground up for the purposes of obtaining Raman spectra during potentiostatic operation. These results represent some of the first operando Raman spectroscopy explorations into the breakdown products that are generated from high oxidative potential conditions with carbonate electrolytes.” Find the paper and full list of authors in the Journal of the American Chemical Society.

-

‘Non-Volatile Magnon Transport in a Single Domain Multiferroic’

“Antiferromagnets have attracted significant attention in the field of magnonics, as promising candidates for ultralow-energy carriers for information transfer for future computing. … In multiferroics such as BiFeO3 the coupling between antiferromagnetic and polar order imposes yet another boundary condition on spin transport. Thus, understanding the fundamentals of spin transport in such systems requires a single domain, a single crystal. We show that through Lanthanum (La) substitution, a single ferroelectric domain can be engineered with a stable, single-variant spin cycloid, controllable by an electric field.” Find the paper and full list of authors in Nature Communications.

-

‘Psychophysics of Neon Color Spreading: Chromatic and Temporal Factors Are not Limiting’

“Neon color spreading (NCS) is an illusory color phenomenon that provides a dramatic example of surface completion and filling-in. Numerous studies have varied both spatial and temporal aspects of the neon-generating stimulus to explore variations in the strength of the effect. Here, we take a novel, parametric, low-level psychophysical approach to studying NCS. … There is no evidence in this study that the processes underlying NCS are slower than the low-level processes of simple flicker detection. These results point to relatively fast mechanisms, not slow diffusion processes, as the substrate for NCS.” Find the paper and authors list in Vision…

-

‘Confinement of Excited States in Two-Dimensional, In-Plane, Quantum Heterostructures’

“Two-dimensional (2D) semiconductors are promising candidates for optoelectronic application and quantum information processes due to their inherent out-of-plane 2D confinement. In addition, they offer the possibility of achieving low-dimensional in-plane exciton confinement, similar to zero-dimensional quantum dots, with intriguing optical and electronic properties via strain or composition engineering. … Here, we report the observation of lateral confinement of excitons in epitaxially grown in-plane MoSe2 quantum dots (~15-60 nm wide) inside a continuous matrix of WSe2 monolayer film via a sequential epitaxial growth process.” Find the paper and full list of authors in Nature Communications.

-

‘Red Light-Activated Depletion of Drug-Refractory Glioblastoma Stem Cells and Chemosensitization of an Acquired-Resistant Mesenchymal Phenotype’

“Glioblastoma stem cells (GSCs) are potent tumor initiators resistant to radiochemotherapy, and this subpopulation is hypothesized to re-populate the tumor milieu due to selection following conventional therapies. Here, we show that 5-aminolevulinic acid (ALA) treatment— a pro-fluorophore used for fluorescence-guided cancer surgery—leads to elevated levels of fluorophore conversion in patient-derived GSC cultures, and subsequent red light-activation induces apoptosis in both intrinsically temozolomide chemotherapy-sensitive and -resistant GSC phenotypes.” Find the paper and full list of authors in Photochemistry and Photobiology.

-

Understanding a tragedy: Miller reviews ‘A Day in the Life of Abed Salama’

Professor of law and international affairs Zinaida Miller reviews Nathan Thrall’s “A Day in the Life of Abed Salama: Anatomy of a Jerusalem Tragedy” for Just Security. The book analyzes the origins of a tragic school bus accident in 2012: “the accident could be labeled accidental in only a literal and immediate sense: no one intended, planned, or desired it,” Miller writes. “And yet, the conditions that made a rainy day deadly were far from accidental.” “A Day in the Life of Abed Salama” examines the various structural forces at play that contributed to the accident.

-

‘Strategic Behavior of Large Language Models and the Role of Game Structure Versus Contextual Framing’

“This paper investigates the strategic behavior of large language models (LLMs) across various game-theoretic settings, scrutinizing the interplay between game structure and contextual framing in decision-making. We focus our analysis on three advanced LLMs—GPT-3.5, GPT-4, and LLaMa-2—and how they navigate both the intrinsic aspects of different games and the nuances of their surrounding contexts. Our results highlight discernible patterns in each model’s strategic approach. GPT-3.5 shows significant sensitivity to context but lags in its capacity for abstract strategic decision making.” Find the paper and full list of authors in Scientific Reports.

-

‘SepsisLab: Early Sepsis Prediction With Uncertainty Quantification and Active Sensing’

“Sepsis is the leading cause of in-hospital mortality in the USA. Early sepsis onset prediction and diagnosis could significantly improve the survival of sepsis patients. Existing predictive models are usually trained on high-quality data with few missing information, while missing values widely exist in real-world clinical scenarios. … The uncertainty of imputation results can be propagated to the sepsis prediction outputs, which have not been studied in existing works on either sepsis prediction or uncertainty quantification.” Find the paper and full list of authors in the proceedings of the 2024 ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD 2024).

-

‘Fidelity of the Kitaev Honeycomb Model Under a Quench’

“We theoretically study the influence of quenched outside disturbances in an intermediately long-time limit. We consider localized imperfections, uniform fields, noise, and couplings to an environment within a unified framework using a prototypical but idealized interacting quantum device—the Kitaev honeycomb model. As a measure of stability we study the Uhlmann fidelity of quantum states after a quench. … Our work provides estimates for the intermediate long-time stability of a quantum device, offering engineering guidelines for quantum devices in quench design and system size.” Find the paper and full list of authors in Physical Review B.

-

‘Self-Help Groups and Opioid Use Disorder Treatment: An Investigation Using a Machine Learning-Assisted Robust Causal Inference Framework’

“This study investigates the impact of participation in self-help groups on treatment completion among individuals undergoing medication for opioid use disorder (MOUD) treatment. Given the suboptimal adherence and retention rates for MOUD, this research seeks to examine the association between treatment completion and patient-level factors. Specifically, we evaluated the causal relationship between self-help group participation and treatment completion for patients undergoing MOUD.” Find the paper and full list of authors in the International Journal of Medical Informatics.

-

Santillana added to Atlas of Inspiring Hispanic/Latinx Scientists

Mauricio Santillana, a professor of both physics and electrical and computer engineering at Northeastern University, has been added to the Atlas of Inspiring Hispanic/Latinx Scientists, hosted by the Fred Hutch Cancer Center. The atlas is described as “a grassroots effort developed to showcase the expertise, talents, and diversity of Hispanic and Latinx scientific faculty.” Santillana’s research focuses on modeling complex events, such as disease outbreaks, through machine learning and network science.

-

‘Cosmologically Consistent Analysis of Gravitational Waves From Hidden Sectors’

“Production of gravitational waves in the early Universe is discussed in a cosmologically consistent analysis within a first-order phase transition involving a hidden sector feebly coupled with the visible sector. Each sector resides in its own heat bath leading to a potential dependent on two temperatures and on two fields: one a standard model Higgs field and the other a scalar arising from a hidden sector 𝑈(1) gauge theory. A synchronous evolution of the hidden and visible sector temperatures is carried out from the reheat temperature down to the electroweak scale.” Find the paper and authors list in Physical Review D.

-

Discovering the ‘Fundamentals of IoT Communication Technologies’

Rolando Herrero, program director of telecommunication networks and cyber-physical systems at Northeastern University, “presents a comprehensive resource of the Internet of Things and its networking and protocols, intended for classroom use,” according to the publisher’s webpage. The textbook, titled “Fundamentals of IoT Communication Technologies,” is based on a “popular class” that Herrero teaches, and the book includes examples, slides and “a ‘hands-on’ section where the topics discussed as theoretical content are built as stacks in the context of an IoT network emulator.”

-

‘Native Capillary Electrophoresis–Mass Spectrometry of Near 1 MDa Non-Covalent GroEL/GroES/Substrate Protein Complexes’

“Protein complexes are essential for proteins’ folding and biological function. Currently, native analysis of large multimeric protein complexes remains challenging. Structural biology techniques are time-consuming and often cannot monitor the proteins’ dynamics in solution. Here, a capillary electrophoresis-mass spectrometry (CE–MS) method is reported to characterize, under near-physiological conditions, the conformational rearrangements of ~1 MDa GroEL upon complexation with binding partners involved in a protein folding cycle. … This study shows the CE–MS potential to provide information on binding stoichiometry and kinetics for various protein complexes.” Find the paper and full list of authors in Advanced Science.

-

‘Prevalence and Correlates of Irritability Among U.S. Adults’

“This study aimed to characterize the prevalence of irritability among U.S. adults, and the extent to which it co-occurs with major depressive and anxious symptoms. A non-probability internet survey of individuals 18 and older in 50 U.S. states and the District of Columbia was conducted between November 2, 2023, and January 8, 2024. … In linear regression models, irritability was greater among respondents who were female, younger, had lower levels of education and lower household income. Greater irritability was associated with likelihood of thoughts of suicide in logistic regression models adjusted for sociodemographic features.” Find the paper and authors list…

-

‘Sophisticated Natural Products as Antibiotics’

“In this Review, we explore natural product antibiotics that do more than simply inhibit an active site of an essential enzyme. We review these compounds to provide inspiration for the design of much-needed new antibacterial agents. … Many of the compounds exhibit more than one notable feature, such as resistance evasion and target corruption. Understanding the surprising complexity of the best antimicrobial compounds provides a roadmap for developing novel compounds to address the antimicrobial resistance crisis by mining for new natural products and inspiring us to design similarly sophisticated antibiotics.” Find the paper and full list of authors in Nature.

-

‘Changes in Cerebral Vascular Reactivity Following Mild Repetitive Head Injury in Awake Rats: Modeling the Human Experience’

“The changes in brain function in response to mild head injury are usually subtle and go undetected. Physiological biomarkers would aid in the early diagnosis of mild head injury. In this study we used hypercapnia to follow changes in cerebral vascular reactivity after repetitive mild head injury. … The changes in vascular reactivity were not uniform across the brain. The prefrontal cortex, somatosensory cortex and basal ganglia showed the hypothesized decrease in vascular reactivity while the cerebellum, thalamus, brainstem, and olfactory system showed an increase in BOLD signal to hypercapnia.” Find the paper and list of authors in Experimental Brain Research.

-

‘Strategies to Optimize the Deployment of Roadway Maintenance Machines for Overnight Maintenance in Urban Rail Systems’

“This research investigates the effectiveness of several strategies to deploy roadway maintenance machines (RMMs) in preparation for overnight maintenance in rapid transit systems. Owing to the short windows of time available for maintenance activities in the overnight period (i.e., when revenue service is suspended), efficient deployment of RMMs is an important aspect of ensuring adequate productive time for crews at work locations. Four deployment strategies are investigated.” Find the paper and full list of authors in Transportation Research Record.

-

Caracoglia to model new wind turbine designs

Luca Caracoglia, professor of civil and environmental engineering, has received NSF funding for a project titled “Modeling the Influence of Turbulence on Flow-Induced Instabilities of Large Flexible Structures With Innovative Applications in Wind Turbine Blades.” Caracoglia will design stochastic models to “promote safe design of next-generation offshore wind turbine structures by enabling slender and lighter blade designs,” the abstract states.