An in-home assistant recognizes that an elderly person needs assistance. A student controls their computer by waving their hands through the air. A video gamer interacts with a virtual environment.

All without touching a mouse, keyboard or other piece of hardware and without speaking to any device.

Some of these applications may sound like science fiction, but with radical new work in computer vision and machine learning being performed by associate professor of electrical and computer engineering Sarah Ostadabbas, along with Ph.D. students Le Jiang and Zhouping Wang, these technologies are tantalizingly close.

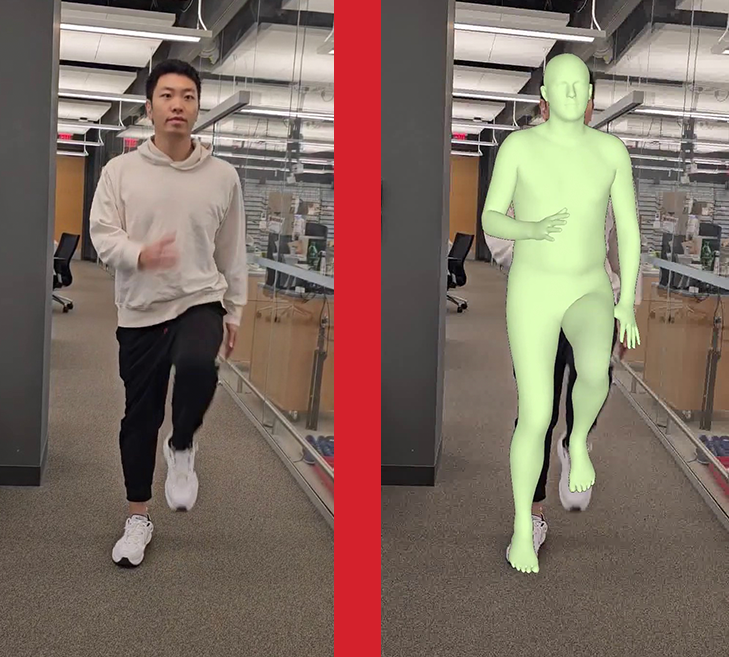

Ostadabbas and her team, operating within her Augmented Cognition Laboratory, are designing an algorithm focused on “human pose and shape estimation.” That is, an algorithm that can identify human-shaped pixels from a video source and then apply a “3D mesh” to that region of the video.

From the flat, two-dimensional image of a single video source, their algorithm can differentiate between the body parts of a human in the scene and extrapolate a three-dimensional model that tracks — and predicts — the pose and shape of human subjects.

Think of the 3D mesh like an artist’s mannequin, articulated at the joints. “You can model the human body with [either] high granularity or low granularity,” Ostadabbas says.

Previous computer vision models have utilized either one or the other. High granularity models focus on large joints, like the shoulders and hips, while low granularity models focus on finer joints, like the bones of the hand, Ostadabbas explains.

Their algorithm will cover both levels of granularity.

“We have added an inverse kinematics model,” says Wang, which optimizes the program’s approximation of a human skeleton and increases its predictive accuracy.

But in addition to this sharper vision of joint granularity, the algorithm designed by Ostadabbas, Jiang and Wang will perform its calculations — it will “see” its human subject — in real time.

“We ensure temporal consistency,” Jiang says, through the algorithm’s ability to estimate future information — where the 3D model will go — by looking at previous frames in the video stream — where the model was. They call this process “sequential data loading.”

If a computer vision algorithm has access to a full video — one that has a beginning and an end — it’s much easier for that program to determine the movement of its three-dimensional model, by looking forward and backward in time to “learn” the mesh’s movement. Not so for the program of Ostadabbas, Jiang and Wang.

“We are assuming that whatever we are extracting only depends on the current frame that we have and the previous frame,” Ostadabbas says. As in the real-world, practical scenarios they are designing for, “We don’t have access to the future frames.”

Their algorithm will be able to run off of livestreamed footage, even using its estimative abilities to fill in the gaps around dropped frames in the video that might be caused by network issues or memory shortage.

This makes real-time, real-world use cases in industries like health care possible, where live alerting about a patient in distress could mean the difference between life and death.

Because of how it predicts movement from one frame to the next, the algorithm can even make estimations about parts of the body that are occluded in the 2D video.

In other words, the algorithm estimates the location (and relative movement) of a participant’s limb even if that limb isn’t visible to the camera, whether it’s moved out of the camera’s field of view or behind a piece of furniture.

Indoor locations “might have lots of obstacles,” Jiang says, but “our method has temporal coherence, which means that it won’t introduce a large influence on our output if the body is suddenly obscured.”

This continuity increases the entire program’s functionality and stability. “We can have a model that is more realistic,” Wang says, “and can solve more problems.”

Ostadabbas recently received a Sony Faculty Innovation Award for this project, initiating a collaboration between her team and Sony team members.

Jiang notes that Sony is one of the frontrunners in the virtual reality industry, having brought the PlayStation VR headset to the video game market. But he also foresees applications in military training, “training in realistic simulated environments,” as well as for new possibilities in the gaming world.

Ostadabbas is the first Northeastern University faculty member to receive the award, which comes with a $100,000 grant.

But what is more exciting, she says, “is the collaboration with Sony. We already have a Slack group set up with them and are meeting with their engineers regularly.”

Ostadabbas sees this as the ideal way of collaborating “between academia and industry,” she says. “We have the technological and algorithmic advancement that we can bring to the picture, and they have the problem formulation, application-specific data, and the need and constraint of the problem.”

“They are coming to us and we are adapting our model to their needs,” she says.

Noah Lloyd is the assistant editor for research at Northeastern Global News and NGN Research. Email him at n.lloyd@northeastern.edu. Follow him on X/Twitter at @noahghola.