The case against killer robots

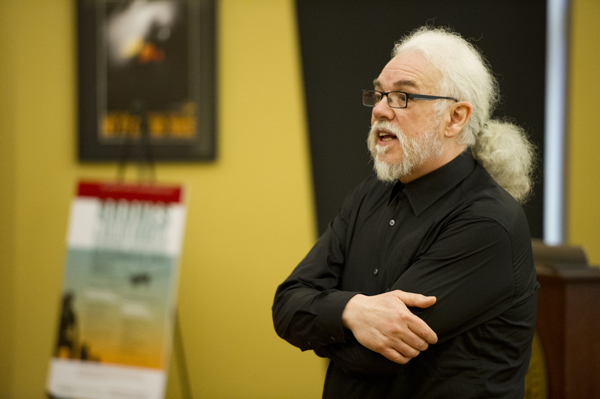

Noel Sharkey, the chairman of the International Committee for Robot Arms Control, argued his case against killer robots last Friday at Northeastern University, saying that autonomous machines should not be allowed to make the decision to kill people on the battlefield.

“We’re on a course toward fully automating warfare,” Sharkey warned in a two-hour lecture on the political, ethical, and legal implications of robotic weapons. “Who in his right mind would automate the decision to kill?”

Later in the day, Sharkey moderated a panel discussion of drones and killer robots. The panelists were Max Abrahms, an assistant professor of political science at Northeastern; Denise Garcia, a member of ICRAC and an associate professor of political science and international affairs at Northeastern; and Patrick B. Johnson, a political scientist at the RAND Corporation, a nonprofit global policy think tank.

The two-part event—the second in a new series titled “Controversial Issues in Security Studies”—was sponsored by the Northeastern Humanities Center and the Department of Political Science. Garcia organized the program with the support of Gerard Loporto, LA’73, and his family.

Sharkey, for his part, is a preeminent expert in robotics and artificial intelligence. As a spokesperson for the Campaign to Stop Killer Robots, he traveled to Geneva earlier this month to persuade the United Nations’ Convention on Conventional Weapons to ban killer robots before they’re developed for use on the battlefield. Fully autonomous weapons, which do not yet exist, would have the ability to select and then destroy military targets without human intervention.

The rise of the machines is a hot-button issue in Washington. In response to criticism of the administration’s use of combat drones, President Obama delivered a speech at the National Defense University in May, promising that the U.S. would use drones only against a “continuing and imminent threat against the American people.”

“The terrorists we are after target civilians and the death toll from their acts of terrorism against Muslims dwarfs any estimate of civilian casualties from drone strikes,” he added. “So doing nothing is not an option.”

In his lecture last Friday, Sharkey laid out his argument against the autonomous Terminator-like weapons. He began by noting that their use could violate at least two principles of international humanitarian law: the principle of distinction, which posits that battlefield weapons must be able to distinguish between combatants and civilians; and the principle of proportionality, which posits that attacks on military objectives must not cause excessive loss of civilian life in relation to the foreseeable military advantage.

Of the principle of proportionality, he said, “You can kill civilians provided it’s proportional to direct military advantage, but that requires an awful lot of thinking and careful years of planning. We must not let robots do that under any circumstance.”

Sharkey also censured the CIA’s use of the nation’s current fleet of combat drones in countries with which the U.S. is not at war. “I would like to ask the CIA to stop killing civilians in the name of collateral damage,” Sharkey pleaded. “I really don’t like seeing children being killed, because there’s no excuse for that whatsoever.”

In his opening remarks, Stephen Flynn, the director of Northeastern’s Center for Resilience Studies, articulated the difficulties of rapidly assimilating new warfare technology. “Technology always outpaces our ability to sort out what the guidelines are,” he explained. “What could be tactically effective could also be strategically harmful.

“Issues of policy, technology, and morality are all in play, but they don’t lend themselves to slogans or bumper stickers,” he added. “We won’t have effective conversations unless we delve into these issues.”

In the Q-and-A session, more than a dozen students heeded Flynn’s advice by pressing Sharkey with tough questions. The former president of the Northeastern College Democrats asked what students could do to stop the development of killer robots, prompting Sharkey to encourage them to start a youth movement to raise awareness of the weapons’ dangers.

Another student asked Sharkey whether automating warfare would decrease the human death toll. “I don’t mind protecting soldiers on the ground, but [the use of killer robots] might lead to more battles than you want to be in,” Sharkey replied. “If they’re increasing terrorism, then who are they really protecting?”