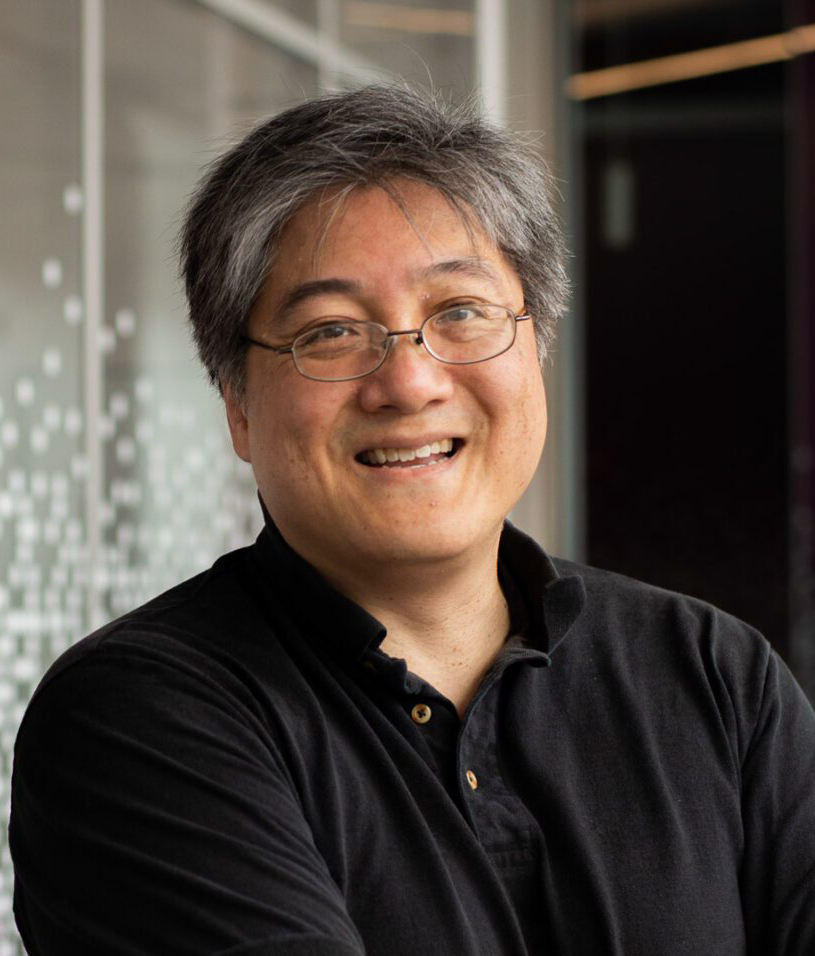

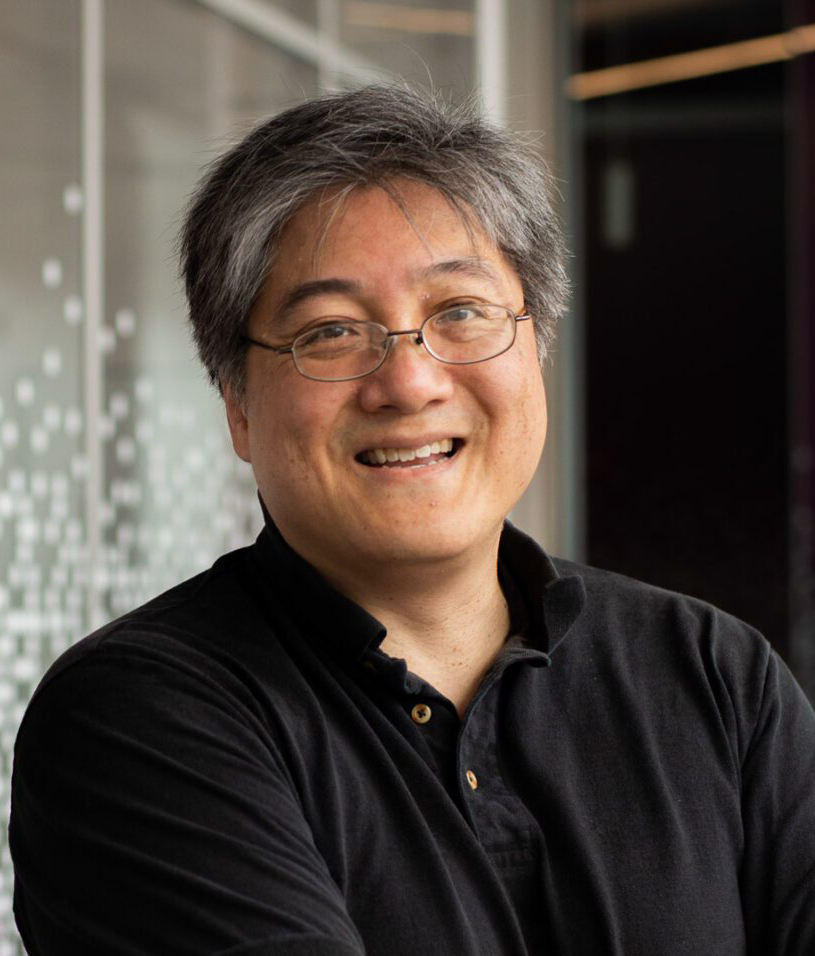

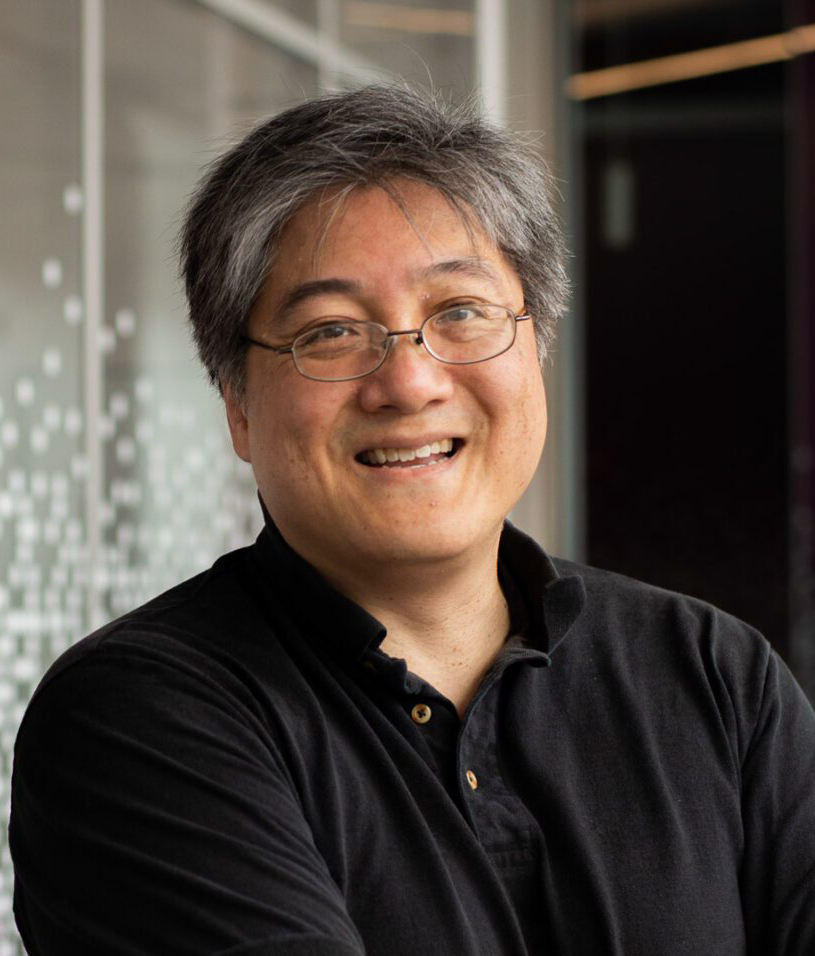

David Bau, an AI researcher who directs Northeastern University’s National Deep Inference Fabric, a project that aims to help researchers understand how large language models work, said these findings show how AI models could be vulnerable to data poisoning. That vulnerability, he said, could allow bad actors to more easily insert malicious traits into the models that they’re training.