An invasive plant is strangling Connecticut’s waters, so these students developed a robotic boat to help fix it

As a native of Connecticut and a boater, Colin McKissick is well aware of the invasive plant that is wreaking havoc in the state’s bodies of water.

Native to Australia, Africa and parts of Asia, hydrilla found its way to Florida in the 1950s, when it was used to bed aquariums because it needs little nutrition or light to grow.

Since then, hydrilla has been dubbed the “world’s worst invasive aquatic plant” because it spreads and grows rapidly and is difficult to control. The plant can now be found in many parts of the United States, but Connecticut has been hit particularly hard by the noxious weed.

A 2020 survey of the Connecticut River commissioned by the Connecticut River Gateway Commission found hydrilla across 200 acres of the river’s lower third. Its dense strands make it hard for native aquatic plants and marine life to thrive, and it often clogs boat propellers.

McKissick, a fifth-year Northeastern student, has experienced this firsthand while boating on the Connecticut River.

“Just going up on the river to get to the boat ports, a couple of times our propeller would get clogged up with the plant, which is wild because you wouldn’t expect a plant to gum up an 80-horsepower engine,” he says.

Enter the Hydrilla Hunter, an autonomous robotic boat outfitted with a hyperspectral camera designed to detect and identify the invasive plant.

McKissick helped develop the boat with a dozen other Northeastern engineering students as part of two capstone project classes.

The goal is to provide the boat to plant scientists at the Connecticut Agricultural Experiment Station to help them more quickly identify and survey where hydrilla can be found and stop it from growing further.

The project is a collaboration between the electrical and computer engineering department, the mechanical engineering department, the Robotics and Intelligent Vehicles Research Lab and the Connecticut Agricultural Experiment Station.

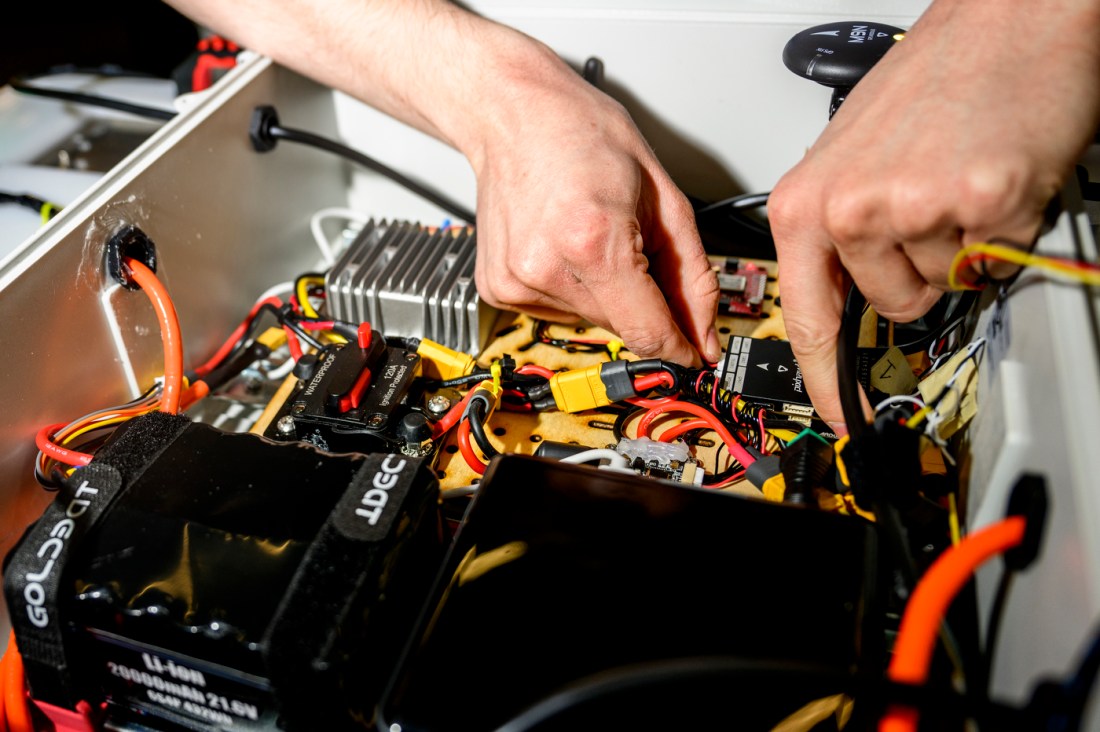

Students working under Charles DiMarzio, associate professor of electrical and computer engineering, created the internals of the device, which include the imaging system, renewable battery and communication systems.

Students working under Randall Erb, associate professor of mechanical and industrial engineering, developed the housing and the boat’s navigation system.

“We came up with a solution to tackle this, which is to automate the detection of the hydrilla and notify the scientists of its location to extract it before it takes over the Connecticut water bodies,” says McKissick, who worked on the electrical and computer engineering side of the project.

So, how does the boat work?

Think of it like a three-step process.

First, the user pinpoints on a map where they would like the robot to go, using a home-base system separate from the robot. Next, as it reaches those points, the robot scans the surface below for hydrilla. Finally, if it detects hydrilla, the user interface adds a pin at the location where the plant was detected.
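For readers who think in code, the three steps can be sketched roughly as follows. This is only an illustration, not the students' software: the `SurveyMap` class, the `run_survey` function and the `detect_hydrilla` callback are hypothetical names, and the coordinates are made up.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A waypoint is a (latitude, longitude) pair; the format is an assumption.
Waypoint = Tuple[float, float]


@dataclass
class SurveyMap:
    """Hypothetical stand-in for the home-base user interface."""
    pins: List[Waypoint] = field(default_factory=list)

    def add_pin(self, location: Waypoint) -> None:
        # Step 3: mark the spot where hydrilla was detected.
        self.pins.append(location)


def run_survey(
    waypoints: List[Waypoint],
    detect_hydrilla: Callable[[Waypoint], bool],
    survey_map: SurveyMap,
) -> List[Waypoint]:
    """Visit each user-chosen waypoint and pin every positive detection."""
    # Step 1 happens before this call: the user picks the waypoints.
    for waypoint in waypoints:
        # Step 2: scan at this waypoint (the detector is a placeholder here).
        if detect_hydrilla(waypoint):
            survey_map.add_pin(waypoint)
    return survey_map.pins


# Made-up coordinates and a fake detector, purely for illustration.
route = [(41.30, -72.35), (41.31, -72.36), (41.32, -72.37)]
infested = {(41.31, -72.36)}
pins = run_survey(route, lambda wp: wp in infested, SurveyMap())
```

In this toy run, only the second waypoint ends up pinned, since it is the only one the fake detector flags.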

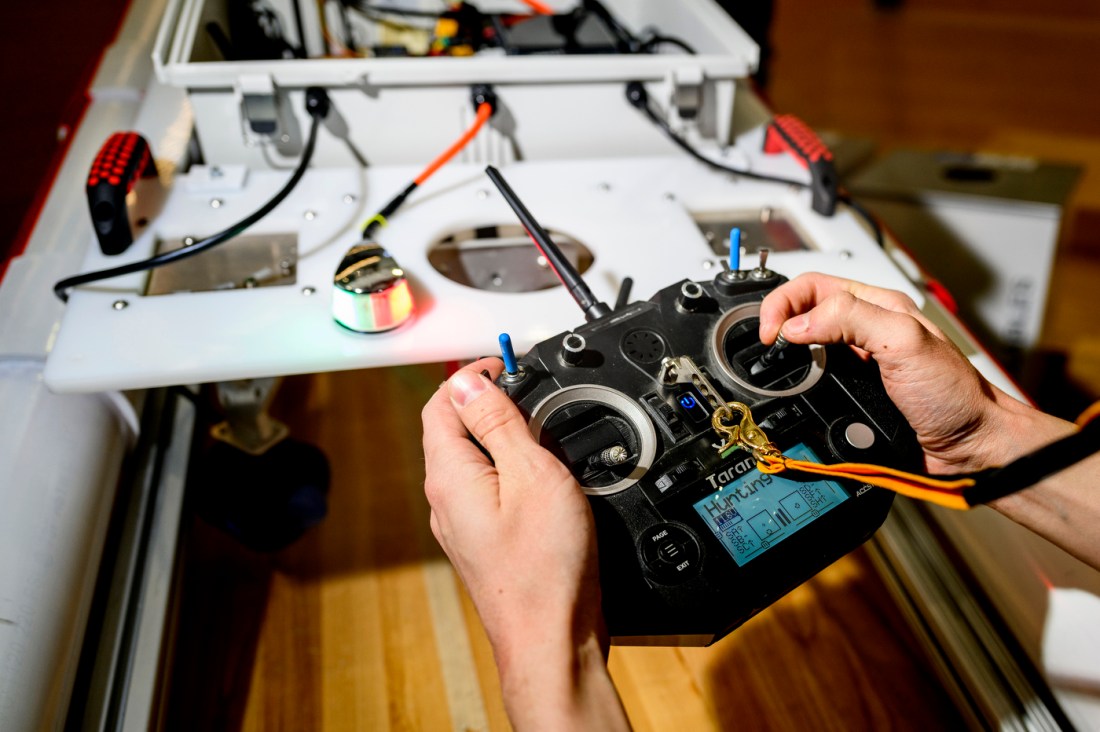

The robotic boat weighs 62 pounds, can travel at speeds of up to 1.3 miles per hour and can operate for 90 minutes on a charge. It can either be controlled remotely or operate autonomously, explains Daniel T. Simpson, a fourth-year student who worked on the mechanical engineering side of the project.
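Those figures put an upper bound on how far the boat can travel per charge. The short calculation below assumes the boat runs at top speed for the full 90 minutes, which real surveys are unlikely to do; the function name is a hypothetical one chosen for illustration.

```python
# Figures from the article; constant top speed is a simplifying assumption.
MAX_SPEED_MPH = 1.3
RUNTIME_MINUTES = 90


def max_range_miles(speed_mph: float = MAX_SPEED_MPH,
                    runtime_minutes: float = RUNTIME_MINUTES) -> float:
    """Distance covered at a constant speed over one battery charge."""
    return speed_mph * runtime_minutes / 60


range_miles = max_range_miles()  # roughly 1.95 miles
```

Under that assumption, a single charge covers just under 2 miles, so longer stretches of river would need multiple runs or battery swaps.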

“I can manually control it and tell it to move forward, backward, and I can flip a switch and the robot’s software will say, ‘OK, let me look at the GPS waypoints I was told to go to, and let me start going through those points,’” he says.

Jessica Healey, a fourth-year student working in the mechanical engineering group, says the two teams worked closely together to develop the project.

“Throughout the semester, we would meet up monthly, sometimes more frequently depending on what was going on, and just touch base with each other,” she says.

Methods currently used to survey for the plant involve scientists on boats searching for several hours a week using heavy underwater cameras. Identifying the plant can also be a challenge because it looks similar to native species.

That’s what makes the robot’s hyperspectral camera ideal for this kind of situation, explains Lisa Bryne, a fifth-year student who worked on the electrical and computer engineering side of the project. Hyperspectral cameras work by capturing a range of wavelengths wider than what the human eye can perceive.

“These plants look incredibly similar, and the data in the infrared is really valuable to be able to distinguish the plants,” Bryne says.

The idea for the project was born out of discussions the students had with Taskin Padir, professor of electrical and computer engineering and head of the Robotics and Intelligent Vehicles Research Lab.

Through the lab, Padir had already drafted a National Science Foundation proposal with Jeremiah Foley, a plant scientist at the Connecticut Agricultural Experiment Station, about using robotics to help solve the hydrilla problem.

“We’ve been thinking about this problem from an environmental robotics perspective for a while,” Padir says. “It’s a [relatively] unknown yet important problem.”

Foley has big plans for how he’d like to use the system. Ideally, the station would hire a number of technicians to bring the robot to bodies of water in Connecticut where fishermen typically fish, since they tend to unintentionally carry pieces of hydrilla with them as they move between bodies of water.

“Rather than getting out to a water body and having us drive around for hours on end, we can send a robot in and my technicians can do it,” he says. “I can stay back in the lab and collaborate with them.”

Solving these kinds of problems is central to the ethos of Northeastern’s Institute of Experiential Robotics, of which Padir is the director.

“We always talk about four pillars of experiential robotics, and one of them is experiential discovery,” Padir says. “That doesn’t happen in the lab. It happens outside, when we reach out to stakeholders, when we try to understand the problems that need to be solved. We usually don’t approach the problem by saying ‘Oh we have a robot here. Let’s solve your problem.’”

“What we do is try to understand the problem, what the bottlenecks are and come back to the lab to try and create a solution toward solving that problem,” he adds.

The students took Padir’s suggestion and ran with it, working directly with Foley to help develop a useful robotic tool.

“What’s cool about our project is that we actually had a stakeholder say, ‘Hey, we have this huge problem, can you help us engineer a solution.’ That’s where we came in,” says Arjun Fulp, a fourth-year student who was in the electrical engineering capstone group.

Cesareo Contreras is a Northeastern Global News reporter. Email him at c.contreras@northeastern.edu. Follow him on X/Twitter @cesareo_r and Threads @cesareor.