The five crucial ways LLMs can endanger your privacy

The power of large language models to collect and expose private, personal data goes far beyond the information that they train on or memorize, according to Northeastern research.

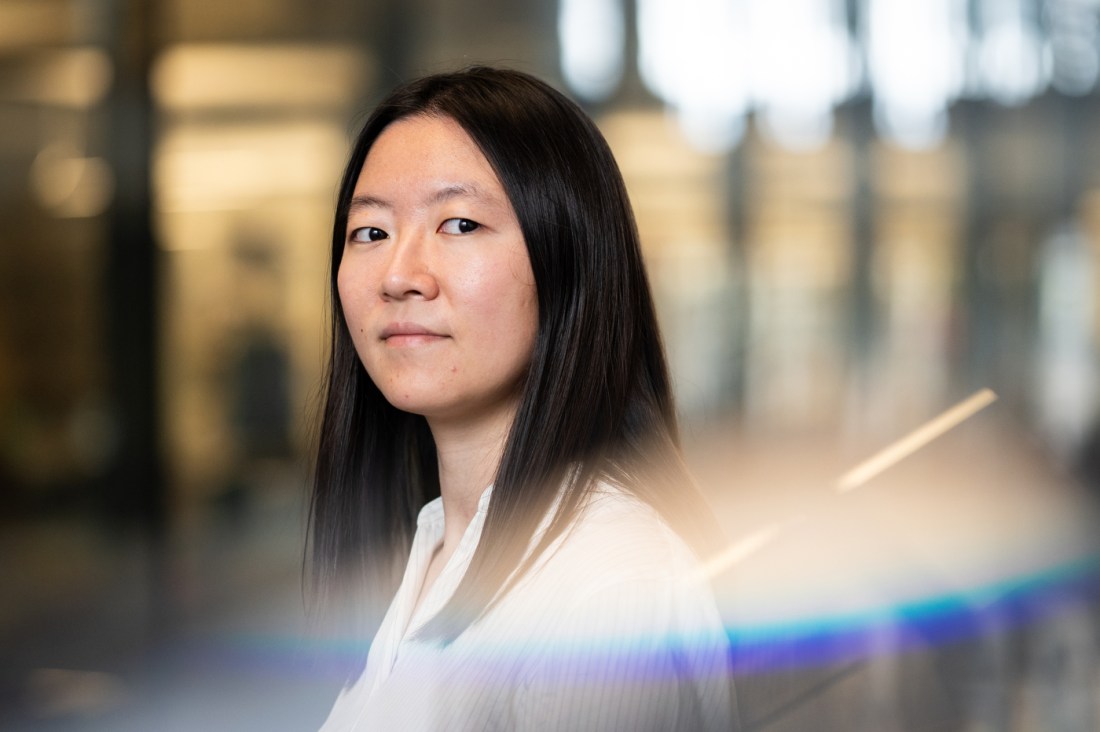

The privacy concerns around large language models like ChatGPT, Claude and Gemini are more serious than just the data the algorithms ingest, according to a Northeastern University computer science expert.

There are four other critical, understudied threats to privacy that artificial intelligence poses: uninformed consent in user agreements, autonomous AI tools that don’t understand privacy norms, deep inference that allows for the quick gathering of personal data and direct attribute aggregation, which democratizes surveillance capabilities, says Tianshi Li, a Northeastern University assistant professor of computer science.

In a literature review of more than 1,300 computer science conference papers from the last decade that addressed privacy concerns with large language models, or LLMs, Li observed that 92% of them focused on issues of data memorization and leakage, radically underestimating the risks posed by data aggregation, deep inference and agentic AI.

LLMs can pop your personal bubble

Data memorization, Li says, is the process through which an LLM ingests a data set and encodes that information in its algorithms.

Not all data that an LLM reads is memorized. Much of it is simply learned from and then discarded, like learning the grammar of a language, how the words go together, without learning the exact vocabulary. Memorized data, by contrast, is data the model returns to repeatedly until it becomes baked into the system.

It is, however, incredibly hard to know which information has been memorized by the LLM, Li says, and crucially, “you don’t know how to erase it from the model’s memory if you want to delete it.”

Yet, Li says, there are at least four other ways that LLMs can create significant privacy concerns for users and nonusers alike.

One of those ways, uninformed consent, is familiar to many who use the internet on a regular basis, and includes complicated consent or opt-out forms that obscure what information a website collects. Each of the companies the researchers looked at had significant loopholes that allowed them to retain certain chats with their LLMs even after a user had opted out.

Because so much data is retained, it is difficult to know exactly what is or isn’t being stored long-term.

The concerns around data memorization and uninformed consent revolve around leakage: Information that the LLM has memorized could be exposed to someone who wasn’t supposed to see it. The other three concerns are more sinister.

Agents, inference and aggregation

The third privacy issue comes down to the increasingly agentic and autonomous capabilities large language models possess and continue acquiring at a rapid rate, Li says.

For instance, some users are now embedding LLMs in email accounts to write automatic replies. These tools have access to things like “proprietary data sources, or the entire open internet,” Li says.

The problem is that LLMs don’t understand or respect privacy, Li notes, and so the collection and dissemination of personal data onto the wider internet, or the ingestion of personal data accidentally left on the internet, becomes a real possibility.

“There are also other cases that malicious users can weaponize these agentic AI capabilities, because they are capable of retrieving and analyzing and synthesizing information at a much faster speed than humans can,” she continues.

This speed benefits both the average user and the malicious hacker trying to gather personal information about a victim, and here’s the thing: LLMs don’t need the specifics.

This is the fourth concern, what Li calls deep inference. Because LLMs can synthesize and analyze data so quickly, “you can use that to infer attributes from seemingly normal, harmless data,” she says. When one of these agentic AIs views a photograph posted online, which the poster might think contains no identifying information, the AI might nevertheless be able to infer a precise location.

Suddenly, a social media photo can be used to tell a malicious user exactly where you were when that photo was taken.

As an example of this, Li suggests asking ChatGPT “to search for your email address, and then ask it to try to get all the information related to that email address. I think you will be surprised by how much information it knows.”

The final understudied concern is direct attribute aggregation, which Li thinks is potentially the most dangerous due to its easy accessibility.

Direct attribute aggregation, according to Li’s position paper, radically “democratizes surveillance,” due to LLMs’ sheer ability to collect, synthesize and analyze “large volumes of online information.”

Even those without coding skills or other technical abilities can suddenly retrieve sensitive information, giving bad actors a hand when it comes to impersonation, cyberstalking or doxing — releasing private details onto the internet with the intent to harm.

Sharing your information

Some of the privacy dangers may now be unavoidable, Li says, and the risks extend beyond using LLMs directly. Any disclosure now made on the internet could lead to a breach of privacy.

“And very unfortunately, it also involves, maybe, how you made those disclosures in the past,” she continues.

Li notes that this is a somewhat pessimistic position to take, but she hopes that, as people become more aware of the risks, they will be able to make better-informed decisions about the information they share online.