Northeastern professor leads open collaborative project to understand AI reasoning models

The new project takes advantage of inference tools being developed at NDIF to help AI researchers in the field gain a deeper understanding of how these systems take in input and produce output.

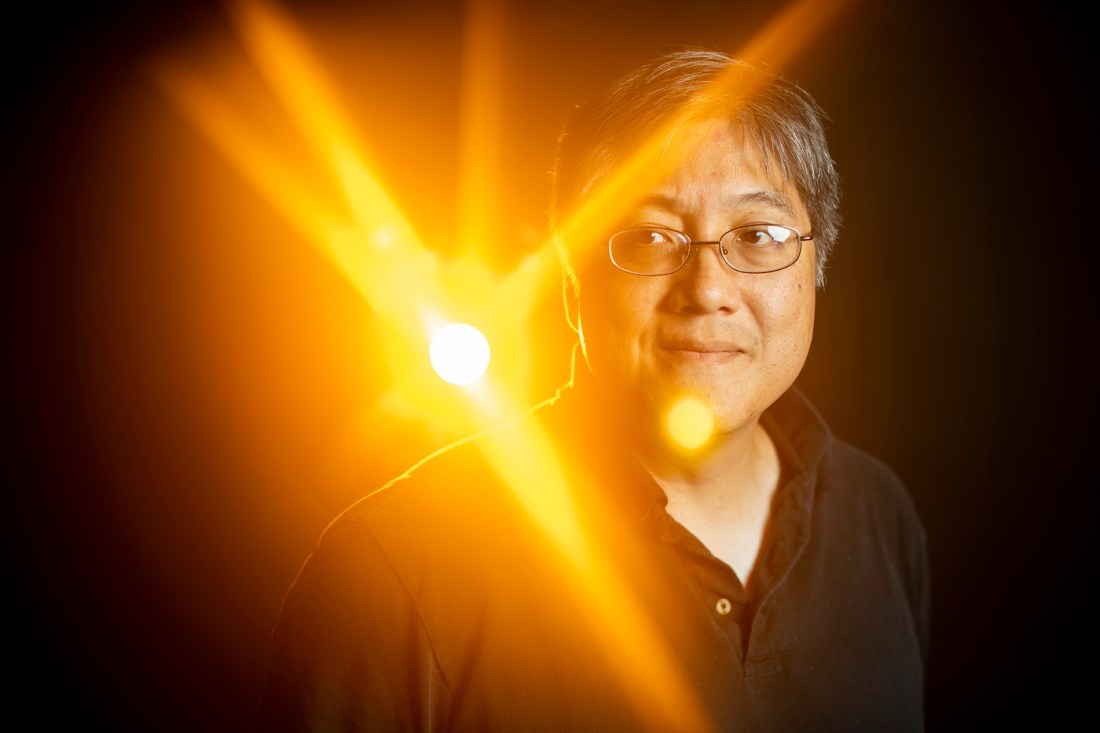

Northeastern University professor David Bau understands the value of collaboration in solving complex problems.

One technology getting a great deal of attention from researchers these days is large language models (LLMs).

Bau, an assistant professor in the Khoury College of Computer Sciences, knows this well.

As principal investigator of the National Deep Inference Fabric (NDIF) at Northeastern University, Bau is trying to bring more transparency to the development of these systems and understand their “black box” components.

As part of his work at NDIF, Bau has just launched the ARBOR Project, a new collaborative effort to study AI reasoning models. ARBOR stands for Analysis of Reasoning Behavior through Open Research. Reasoning models are a new class of LLMs that solve complex problems by producing long chains of deductions or thoughts, effectively long internal monologues.

The new multi-university project takes advantage of inference tools being developed at NDIF to help artificial intelligence researchers in the field gain a deeper understanding of how these systems take in input and produce output, Bau says.

The open research aspect of the program is key, Bau explains. Anyone, from university professors to researchers in industry, can contribute to the project.

The goal of ARBOR

Getting involved is as easy as visiting one of the discussion boards linked on the group's GitHub. So far, discussions have focused on identifying deceptive behavior, reasoning trees, and mechanisms of verification and backtracking.

“The project is all about taking the reasoning models, cracking them open, understanding how reasoning works, identifying things that these reasoning models are doing that may be unspoken, things that go beyond human knowledge, understanding their internal calculations and making them transparent,” says Bau.

So far, more than 30 people have been involved in the project, and the discussions range from “multi-state and international collaborations” being conducted on video calls to more local conversations, Bau says.

The goal is for those conversations to turn into published research.

“Our perspective is that the problem is too immense to try to tackle in the old-fashioned way,” says Bau. “There are multiple research labs that are capable of answering some of the foundational questions here, but what would normally be done is that everybody would go and study these things separately.”

That often results in multiple published papers that reach very similar conclusions, he says.

“With every new AI model, each of the labs would crack it open to look at the same basic things over and over again, a huge amount of duplication. It’s costly and slow.”

“Our theory that drives the ARBOR project is that you can make progress faster on a really, really important problem for humanity by having more transparency. It doesn’t mean that every single research project in the field is now going to be public. I think what it means is that a lot of the basic foundational questions — a lot of the basic infrastructure we need to be looking at to understand the scaffolding of these systems — will be appropriate to do collaboratively in public.”