Misinformation spread differently than most content on Facebook during the 2020 election, Northeastern research finds

Where did you hear that?!?

It was a common response during the 2020 election season, in an age of “fake news,” declining trust in traditional media, and social media virality.

New research from Northeastern University offers some answers — finding misinformation spread very differently than most content on Facebook during the 2020 election season, relying on gradual, peer-to-peer sharing from a smaller number of users in the wake of crackdowns on misinformation from Pages and Groups on the social media platform.

“We found that most content is spread via a big sharing event, it’s not like it trickles out,” David Lazer, university distinguished professor of political science and computer sciences, says. “For misinformation, it’s different. Misinformation — at least in 2020 — spread virally.”

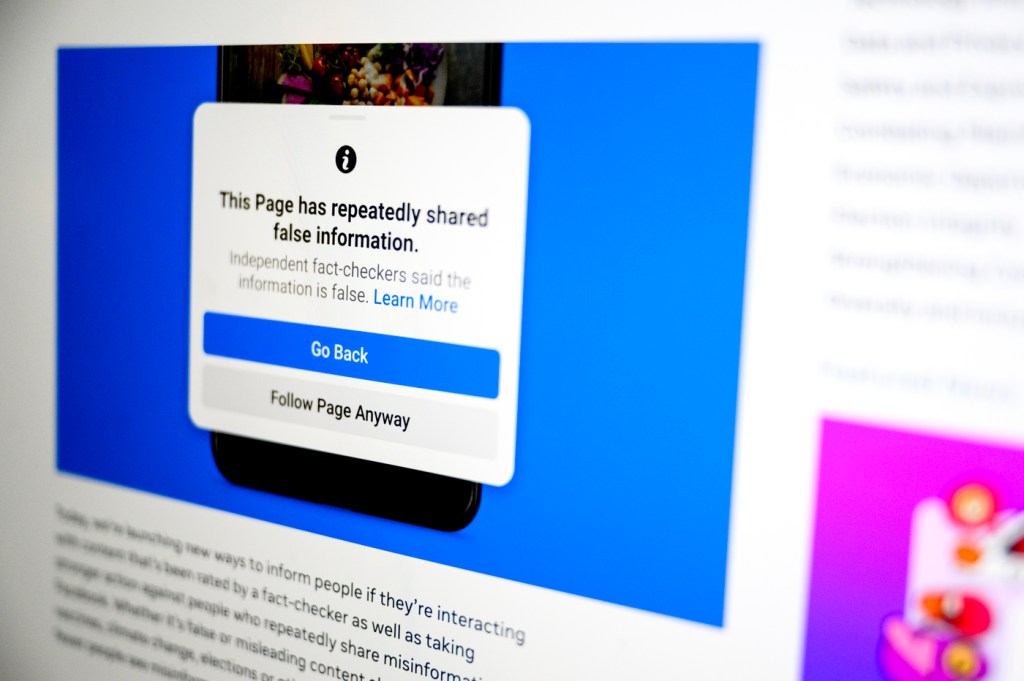

“Our inference is that this is likely because the content moderation policy by Facebook focused in 2020 on pages and groups,” Lazer continues.

Misinformation “abounded” throughout 2020, according to the Pew Research Center, with a frightening and novel global pandemic, a polarized electorate, a declining traditional media structure and foreign adversaries peddling misinformation. Several social media companies enacted policies to combat misinformation during this time, actions that intensified after the U.S. Capitol was overrun by a mob fueled by the lie of a stolen election.

To examine how such misinformation (distinguished as content labeled “false” by third-party fact checkers) spread on Facebook, Lazer and colleagues analyzed all posts shared at least once from summer 2020 through Feb. 1, 2021, on the social media platform and mapped out their distribution network.

The work was done as part of the Facebook/Instagram 2020 election project.

The research, published this week in the journal Sociological Science, reveals several interesting insights.

First, researchers found that most content on Facebook is spread via what Lazer calls “a big bang” or “a big sharing event,” often on Facebook Pages and, to a lesser extent, through Facebook Groups. The distribution network that results is a “tree” that is initially very wide but relatively short.

For example, Lazer notes a post on the Taylor Swift Facebook Page that is initially shared with the page’s 80 million followers and then maybe gets reshared a couple of times by individual users.

“The Taylor Swift tree would be like this giant burst, and then it would sort of trickle down,” Lazer says. “Most of that tree would show when Taylor Swift spread it, not when David Lazer spread it.”

But misinformation resulted in a differently shaped tree, researchers found. These trees gradually expand as individuals share the misinformation, then a few of their friends share it, and so on. This misinformation was spread primarily by only a few people: an estimated 1% of Facebook users generated most misinformation re-shares.

“That’s sort of the ‘slow burn’ kind of tree,” Lazer says. “The tree doesn’t have a big explosive sharing moment, but just continues to get shared and re-shared and re-shared and re-shared.”

The researchers attribute these different growth patterns or “trees” to a couple of factors.

First, Facebook allows Pages and Groups to have unlimited followers or members, respectively. Individual users, however, are limited to 5,000 friends.

Facebook also cracked down on misinformation on Pages and Groups while leaving individual users alone until May 2021.

“If the New York Times shared misinformation, then Facebook would suppress the visibility of the New York Times for a temporary period; but if I shared misinformation, nothing would happen,” Lazer says. “So, it really created a disincentive for pages to share content that might be considered misinformation, and that just meant it just left it to users to share and reshare.”

Such “break-the-glass” measures (measures employed only in times of emergency) “had pretty dramatic effects,” Lazer says.

“Facebook pressed many buttons, they pulled many switches, we can’t really say which one of them mattered,” Lazer says. “But it also seems unlikely that misinformation sharing plummeted to near zero right before the election — that’s not how misinformation works.”

The enforcement was not consistent over the study period, however, researchers found. It increased around Election Day and after Jan. 6, 2021, but waned for stretches of mid-to-late November and December.

As a result, Facebook’s crackdown on misinformation had mixed results, Lazer says.

“Users sharing misinformation really couldn’t use the most effective ways of sharing content, which is sharing content via pages — so in that sense, (Facebook’s actions) probably did reduce the sharing of misinformation,” Lazer says. “But it also shows that, if you plug one hole, the water comes out faster from another hole.”

As for whether that other hole was ever plugged, we’ll never know.

Recent changes in data access and algorithm alterations mean no equivalent study can be done on the 2024 election, Lazer notes.