What’s the history of artificial intelligence? New York Times chief data scientist explains the evolution of AI during Northeastern lecture

In the 1950s, right after the birth of modern computing, it was believed that artificial intelligence could only be made a reality if we could somehow crack the code behind the human mind and replicate it on a computer.

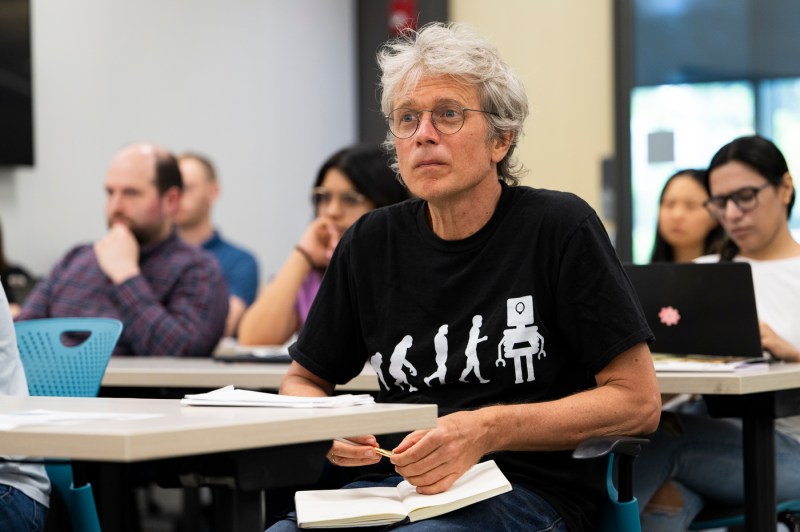

But that assumption was wrong, Chris Wiggins, the chief data scientist for the New York Times, explained Wednesday afternoon during a lecture at the EXP research center on Northeastern University’s Boston campus.

What AI researchers now understand is that artificial intelligence algorithms don’t need to work precisely like the human mind to function. They instead need large amounts of stored data that can be used for mathematical analysis and prediction.

“If you think about the uninterpretable world of large complex models, which are fed to us by datasets, it is clear that we are not solving artificial intelligence by understanding human intelligence so precisely that we put it into a computer,” said Wiggins, who was introduced by David Madigan, Northeastern’s provost and senior vice president for academic affairs.

“We don’t just program it,” Wiggins said. “Instead, we take abundant corpora of data and use mathematics to figure out how we can build function approximators that produce [new] data sets that look like your data set.”

The lecture was hosted by Northeastern’s Institute for Experiential AI and based on the 2023 book Wiggins co-authored, “How Data Happened: A History from the Age of Reason to the Age of Algorithms,” which examines the history of data science dating to 1700.

Why start the discussion in 1700?

That was when the word statistics was first used in the English language, Wiggins said. But it had a much different meaning back then.

“It had nothing to do with mathematics or even numbers,” he said. “It was specifically about statecraft and the science of understanding the state.”

But statistics was a moving target and has evolved many times in the centuries since, moving from a “pencil and paper affair” to a computational one.

“Nowadays when we say the word statistics, we mean mostly the same thing that people meant in the 1950s, when they were talking about mathematical statistics,” he said. “It was a field that really came to be canonized by the time World War II happened.”

Innovations in computing hit warp speed during that war, specifically at Bletchley Park, in Bletchley, England, where code breakers worked to decipher intercepted enemy messages, Wiggins said.

These technologies were no longer being examined solely by academics at universities. They were being used in wartime, which led to the widespread adoption of special-purpose digital hardware, the creation of the military-industrial complex, and what would become the field of artificial intelligence, with research by scientists like Alan Turing, he said.

The term artificial intelligence was coined by computer scientist John McCarthy in 1955 as part of a research project that explored how to make the technology a reality.

Over the next decades, collaborations between the military intelligence community and the private sector led to the development of a robust infrastructure for storing large amounts of data. With access to such a wealth of data, researchers were better equipped to develop more capable machine learning-based algorithms along with other data tools, Wiggins said.

Now, as AI hits the mainstream, concerns around bias, privacy and ethics have come to the forefront, he noted. Additionally, there are concerns around how people’s data is being used and the role governments, corporations and regular citizens play in how that data is accessed and analyzed.

As the world becomes increasingly digitized, should people be concerned about their data and privacy? That was the question posed by Usama Fayyad, executive director of Northeastern’s Institute for Experiential AI.

Wiggins recognized the power imbalance at play, noting that data gives “capabilities and those capabilities are first available to those in power.”

“Right now, power is in the hands of people that have abundant data and technology,” he said. “And when I say people, I really mean private companies, so unchecked power is a concern.”

On the privacy front, Wiggins also has some reservations. He said that, of late, these companies have been pretty good about keeping data secure, though he did highlight the recent leak of some high-level Google search documents.

“Many people effectively give all their data to a small number of companies,” Wiggins said. “And those companies have wisely chosen extremely talented information security officers who have done a pretty good job protecting our data. But you could imagine some pretty high-profile leaks that could really mess things up.”

As a third-year computer science student at Northeastern, Harrison Eckert said he found Wiggins’ lecture illustrative in helping him understand the history of AI.

“I think a lot of people have already heard about AI in the past few years, with these image generation models and text generation models,” he said. “It was really cool to be informed about the context and factors that led to those technologies.”

Ata Uslu, a fourth-year doctoral student studying network science, noted how far we’ve come in our understanding of how AI works since the 1950s. But he’s curious to see the impact today’s research will have on future generations.

“What seemingly insignificant and negligible things that we’re doing right now will be talked about by future ‘Chrises’ that seemed insignificant back then but enabled this incredible potential?” Uslu asked.