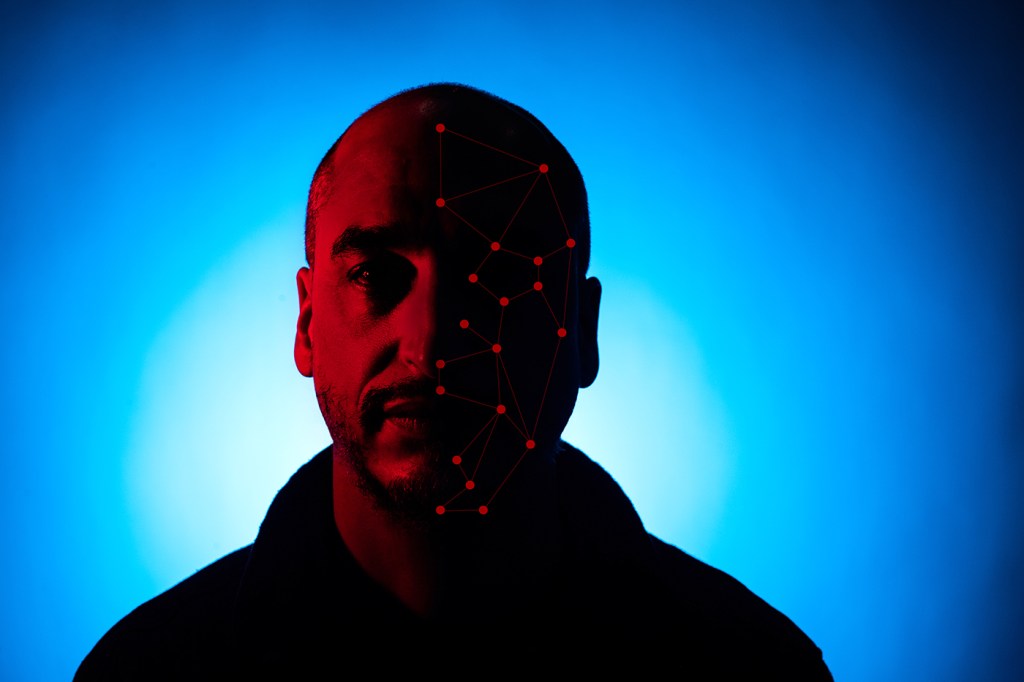

Humans are trying to take bias out of facial recognition programs. It’s not working–yet.

Relying entirely on a computer system’s identification, police in Detroit arrested Robert Julian-Borchak Williams in January of last year. Facial recognition software had matched his driver’s license photo to grainy security video footage from a watch store robbery. There was only one problem: A human eye could tell that it was not Williams in the footage.

What went wrong? Williams and the suspect are both Black, and facial recognition technology tends to make mistakes in identifying people of color.

Raymond Fu, associate professor with joint appointments in the College of Engineering and Khoury College of Computer Sciences at Northeastern University. Photo by Matthew Modoono/Northeastern University

One significant reason for that is likely the lack of diversity in the datasets that underpin computer vision, the field of artificial intelligence that trains computers to interact with the visual world. Researchers are working to remedy that by providing computer vision algorithms with datasets that represent all groups equally and fairly.

But even that process may be perpetuating biases, according to new research by Zaid Khan, a Ph.D. student in computer engineering at Northeastern University, and his advisor Raymond Fu, professor with the College of Engineering and the Khoury College of Computer Sciences at Northeastern University.

“The way that we’re testing fairness in algorithms doesn’t really ensure fairness for all,” Khan says. “It may only ensure fairness in the vague, stereotypical sense of fairness.”

What it comes down to, he explains, is how a computer vision algorithm learns to identify people of different races. Like other artificial intelligence systems, a computer vision algorithm is tasked with learning to identify patterns by studying a massive dataset in a process called machine learning. Every image in the dataset is given a label to identify the subject’s race. In theory, the programmers can then ensure adequate representation among all racial groups.

“People depend on these datasets to make sure that their algorithms are fair,” Khan says. So he decided to test the datasets himself. And he found that the racial labels themselves seem to encode the very biases they’re designed to avoid.

Khan wrote his own algorithms and used four different datasets that are considered benchmarks for fairness in the industry to train those computer vision systems to classify images of people according to race. He found that while the classifier algorithms developed using different datasets agreed strongly about the race of some individuals depicted, they also disagreed strongly about others.

A clear pattern emerged when the algorithms agreed: The people in the images appeared to fit racial stereotypes. For example, an algorithm was more likely to label an individual in an image as “white” if that person had blond hair.

“Most people aren’t stereotypes. People tend to be different from each other,” Khan says. “So unless you make these algorithms fair, come up with a more nuanced way of measuring fairness, ordinary people like me or you will be affected by this stuff.”

Part of the problem is that too many people have to fit into predefined racial categories—a point that Khan and Fu make in the title of their new paper, “One Label, One Billion Faces: Usage and Consistency of Racial Categories in Computer Vision,” posted to the arXiv pre-print server earlier this month. People who are categorized as Black, for example, can trace their origins to many different parts of the world, and can look quite different from one another. Furthermore, people often hold multiple racial identities simultaneously.

“We probably need a better system or more flexible system of categorization which is able to capture these sorts of nuances,” Khan says.

At stake is not just crime-fighting technologies, as in the case of Williams in Detroit. There are many ways that artificial intelligence algorithms are increasingly present in our society. Targeted advertising on social media relies on algorithms that learn from the data you share. Predictive text that appears when you’re composing an email uses artificial intelligence. Apple’s FaceID and similar biometric locks wouldn’t be possible without machine learning algorithms. And artificial intelligence is only expanding.

Bias isn’t just a problem with the datasets that power artificial intelligence.

“The story doesn’t start and end with the data. It’s the whole pipeline that matters,” says Tina Eliassi-Rad, a professor in the Khoury College of Computer Sciences at Northeastern University who was not involved in Khan’s study. “Even if your data was aspirational, you’re still going to have issues.”

When designing an algorithm, she explains, just as in the rest of life, you make decisions and those decisions have consequences. As such, programmers can embed their own values and worldviews into the algorithms that they write, and that can have messy results.

“Usually what is happening with these technologists is a group of usually males, who know nothing about society, sit in a room and design technology for society,” Eliassi-Rad says. “You have no business designing technology for society if you don’t know society.”

A diverse team developing the technology could help think through how it will operate in society more fully, she says, and it’s not just racial or gender diversity that is needed. People of different socioeconomic backgrounds who bring different life experiences to the table could help computer scientists think through the applications and implications of technology before it goes into practice.

Computer scientists also could be more honest and transparent about what their system does, Eliassi-Rad adds. Rather than marketing their programs as something for everyone to use in every possible application, perhaps a better approach would be to share with potential users details about what a system is specifically designed to achieve, why the technology is needed, who it is designed for, and even how it was created.

As more and more algorithms shape how we navigate our world today, concerns about how to keep technology from perpetuating biases abound. But Eliassi-Rad also says artificial intelligence could help shape a fairer future.

Perhaps, she suggests, machine learning algorithms could be used to ensure that laws in practice match the intent for which they were originally written. If a machine learning algorithm was studying police reports about the “stop-and-frisk” policy in New York City, for example, it might conclude that the intent of the policy was to stop and harass young men of color, Eliassi-Rad says.

“So perhaps you can use the data to just shed light on a bad policy or a bad execution of a policy,” she says. “And if you do that, then perhaps you would have better policies, better execution, better data.”

For media inquiries, please contact media@northeastern.edu.