Hate thrives on social media — but who should police it?

The violent riots at the Capitol were abetted and encouraged by posts on social media sites. But from a legal and practical standpoint, it’s often hard to hold social media companies responsible for their users, Northeastern professors say.

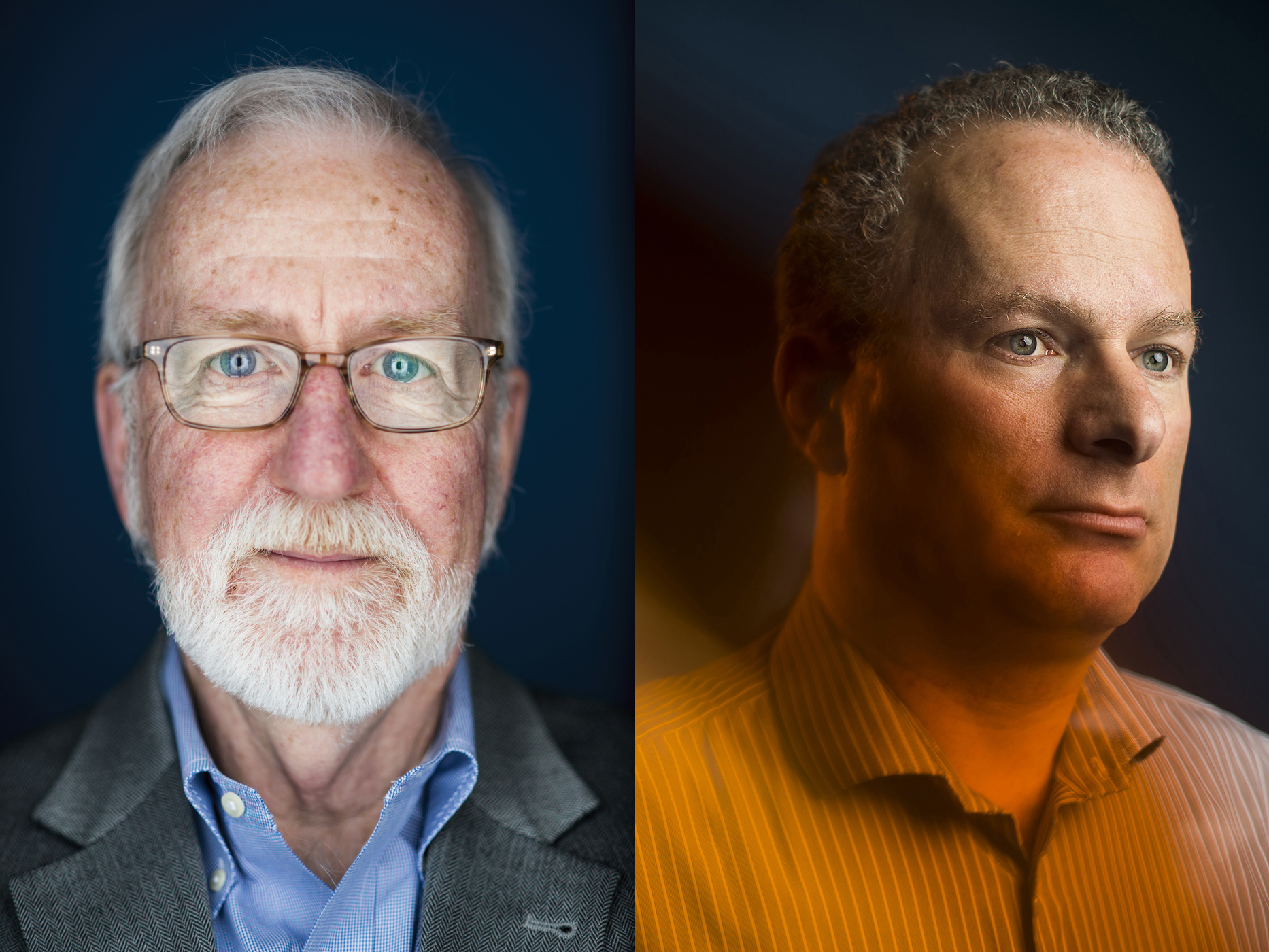

Jack McDevitt, director of the Institute on Race and Justice, argues that many of the posts amount to hate crimes—and that tech companies should be held responsible for violent rhetoric disseminated on their sites. But when it comes to spreading misinformation, exactly who is liable is less clear, says David Lazer, university distinguished professor of political science and computer and information sciences.

Left, Jack McDevitt, director of the Institute on Race and Justice at Northeastern. Right, David Lazer, university distinguished professor of political science and computer and information sciences. Photos by Adam Glanzman/Northeastern University

After last week’s storming of the Capitol, a number of tech companies have banned President Donald Trump from their platforms for making unsubstantiated claims about the election. And several big tech companies cut ties with Parler, an app protesters used to spread violent rhetoric in advance of the riot. The moves have sparked a debate about the responsibility of tech companies to monitor hate speech and misinformation on their sites.

For hate speech, “of course they’re responsible,” says McDevitt, director of the Institute on Race and Justice at Northeastern. “Free speech only goes so far. We can pass legislation that limits these sites. We have the means to hold them accountable, and we should.”

Prosecuting individuals or companies for hate speech or incitement of violence is difficult, especially when social media is involved, McDevitt says. “But that doesn’t mean we shouldn’t try. Most of the laws were written to deal with traditional hate crimes. We need to update these the way we did with internet bullying where we make it something people can be held accountable for.”

In the absence of legislation, private companies have taken action. Following the chaos that erupted last week, Google and Apple blocked Parler from their app stores, citing concerns that it could not adequately screen out material that incites violence. And last week, Amazon suspended Parler from Amazon Web Services, one of the most widely used backend systems that provide internet infrastructure for many apps and sites. On Monday, Parler sued Amazon, asking the courts to reinstate its service.

First Amendment advocates caution that some of those reactions could curb free speech, especially in the case of Amazon deplatforming Parler.

In a blog post on the subject, Northeastern journalism professor Dan Kennedy said that Amazon “has a responsibility to [respect] the free-speech rights of its clients that Twitter and Facebook do not,” seeing as the service is so foundational to so many sites.

“For AWS to cut off Parler would be like the phone company blocking all calls from a person or organization it deems dangerous. Yet there’s little doubt that Parler violated AWS’s acceptable-use policy,” he wrote.

Some have also suggested that punishing Parler for hate speech simply creates demand for new, unregulated alternative sites.

But, McDevitt says, punishing sites for hate speech is still a move in the right direction.

“We’ve done this with child pornography,” McDevitt says. “It doesn’t exist in the mainstream online or on social media. It’s moved to different places, absolutely. But it’s more difficult for people to find it, and that’s better than nothing.”

As for perpetuating false claims, Lazer, who studies the spread of misinformation on social media, is less sure who should be punished, if anyone—and more importantly, who should do the punishing.

“Having Mark and Jack make these decisions isn’t really a terrific process,” says Lazer, referring to Mark Zuckerberg and Jack Dorsey, the CEOs of Facebook and Twitter, respectively.

“It’s not democratic,” Lazer continues. “But on the other hand, I do not like the idea of government regulation. Would regulations made by appointees of Donald Trump do a better job monitoring misinformation? I’m skeptical.”

Lazer attributes the rise of alternative media sites such as Parler in part to the recent wave of speech filtering on mainstream platforms such as Instagram, Twitter, and Facebook.

In recent months, these mainstream platforms started flagging posts that reject the general consensus on topics such as the election and COVID-19.

“In the U.S., you’ve seen the development of a parallel media system by the right to get content out,” Lazer says. Conservatives make up a majority of these alternative media ecosystems, according to his research.
