Northeastern researchers receive National Science Foundation grant to train robots to seamlessly pass objects back and forth with humans

A robot sorts fish in a processing plant, then passes them to a human worker for filleting. An astronaut floating in zero gravity exchanges tools with a robotic assistant. A robot hands a scalpel to a surgeon, freeing up a nurse for more complicated tasks.

In a future where humans and robots work side by side, the ability to pass tools and objects back and forth is vital. Neuroscientists and engineers at Northeastern are working together to make sure robots are up to the task, which is more complicated than it looks.

While we may not realize it, when we hand a glass of water to a friend or take our change from a cashier, our brains are evaluating every move the other person makes. We track their gaze, the orientation of their hand, and the velocity of their arm, and they monitor our movements just as closely.

“Basically, we’ve built predictive models of human behavior over the course of our entire lives,” says Mathew Yarossi, an associate research scientist at Northeastern with a background in biomedical engineering. “For us to hand objects back and forth, we barely have to take any cues from each other, and we can be incredibly predictive of where that object is going to be handed over, the orientation of that object, and things like that.”

And if we make a mistake and start to drop something, we can adjust on the fly to compensate and catch it, most of the time. But getting robots to do the same?

“We’re not there yet,” says Northeastern professor Eugene Tunik, who studies the brain mechanisms involved in human movement. “We’re not even close.”

Tunik and his colleagues recently received a grant from the National Science Foundation to study how humans coordinate hand-offs and to enable robots to make these same adjustments in real time.

“We want the robots to act more like a human collaborator,” says Tunik, who is a professor of Physical Therapy, Movement, and Rehabilitation Science and associate dean of research in the Bouvé College of Health Sciences. “To get robots and humans to be able to infer each other’s intent, and then act proactively to decipher that intent, and be able to seamlessly pass objects back and forth.”

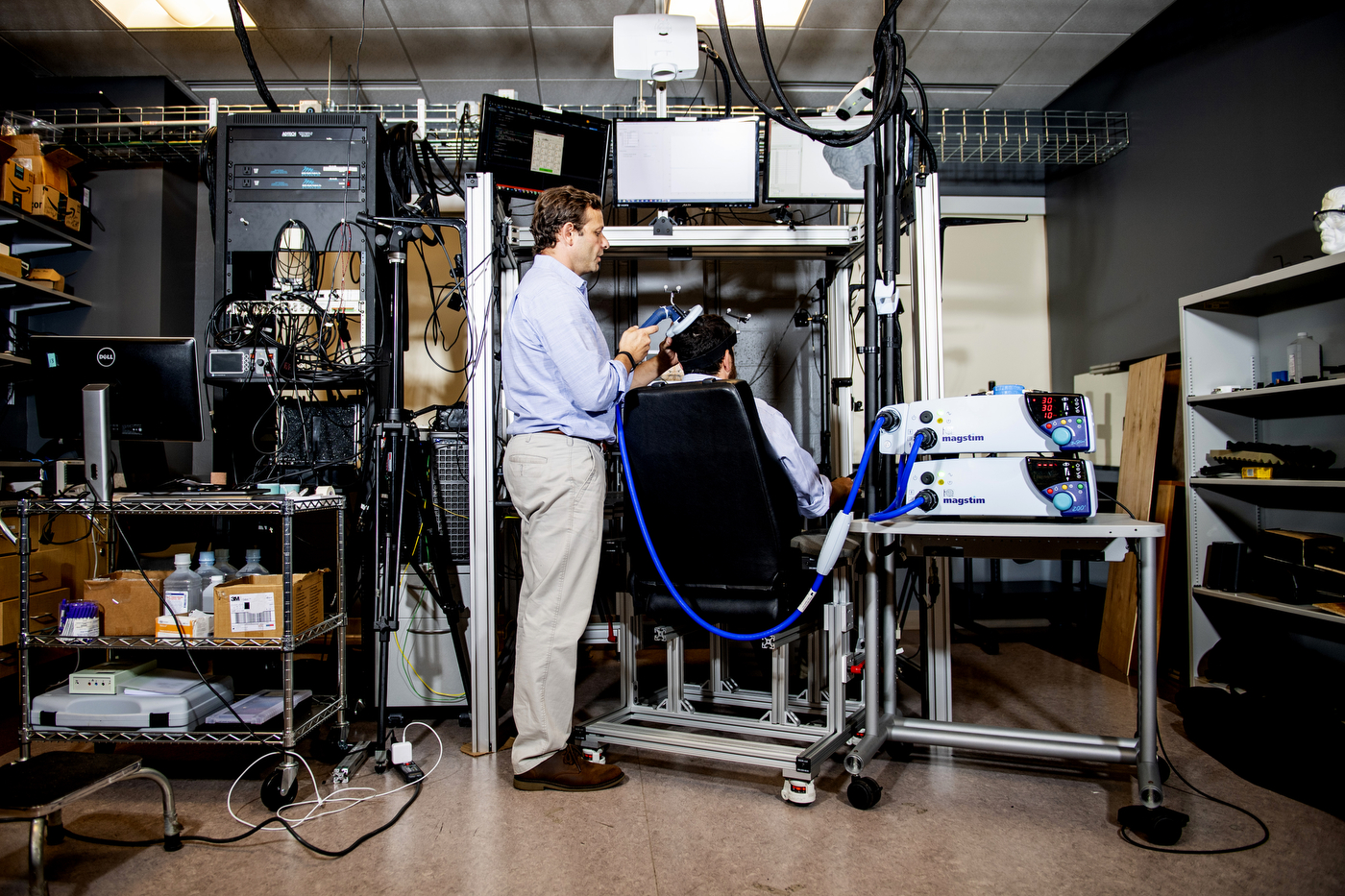

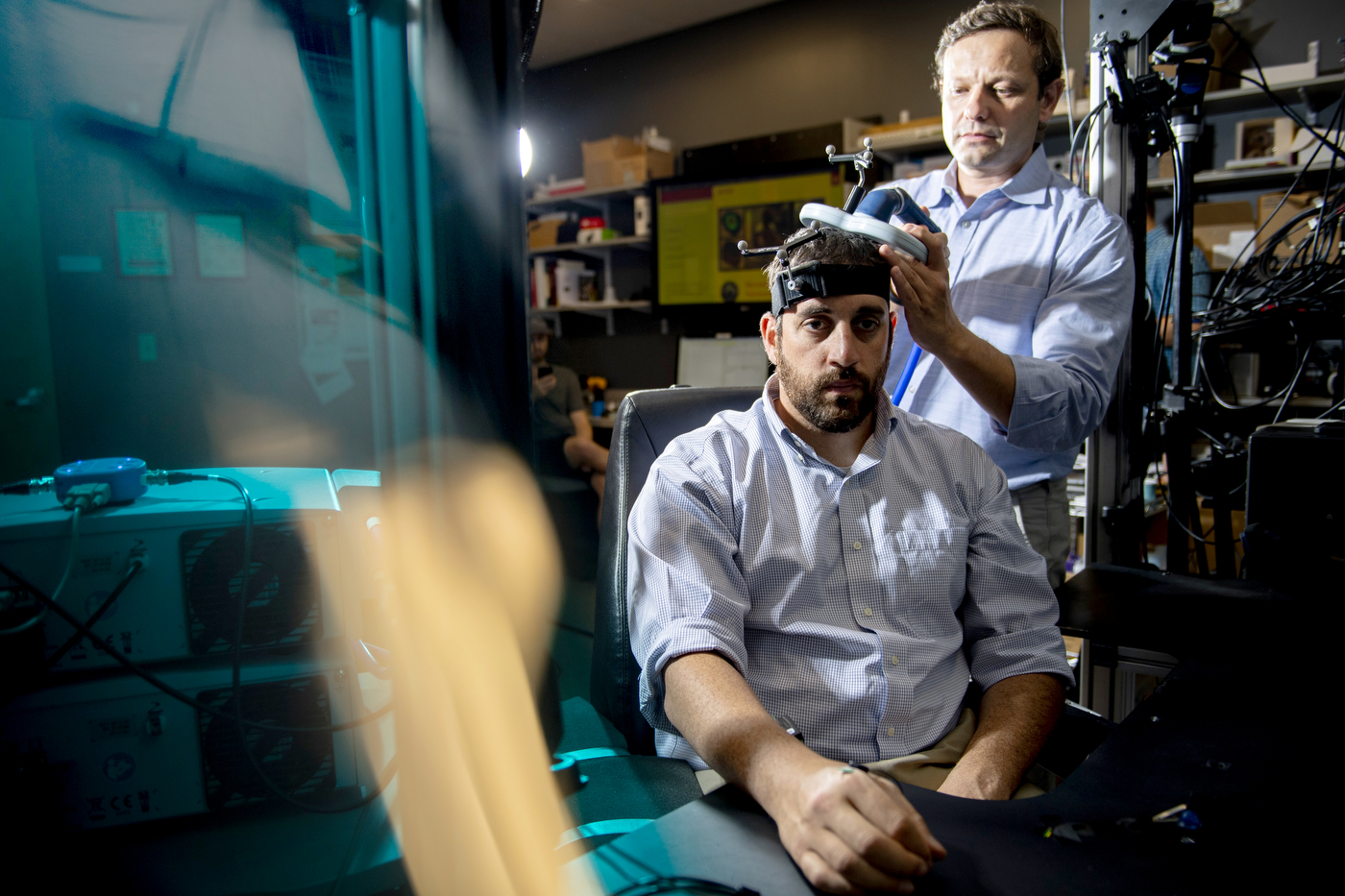

For robots to act like human collaborators, they need to be able to interpret and adapt to human movements and also give cues that humans can easily follow. Tunik and Yarossi will conduct a series of experiments to understand what humans do during an exchange with one another. That information will inform programming and design choices for robots.

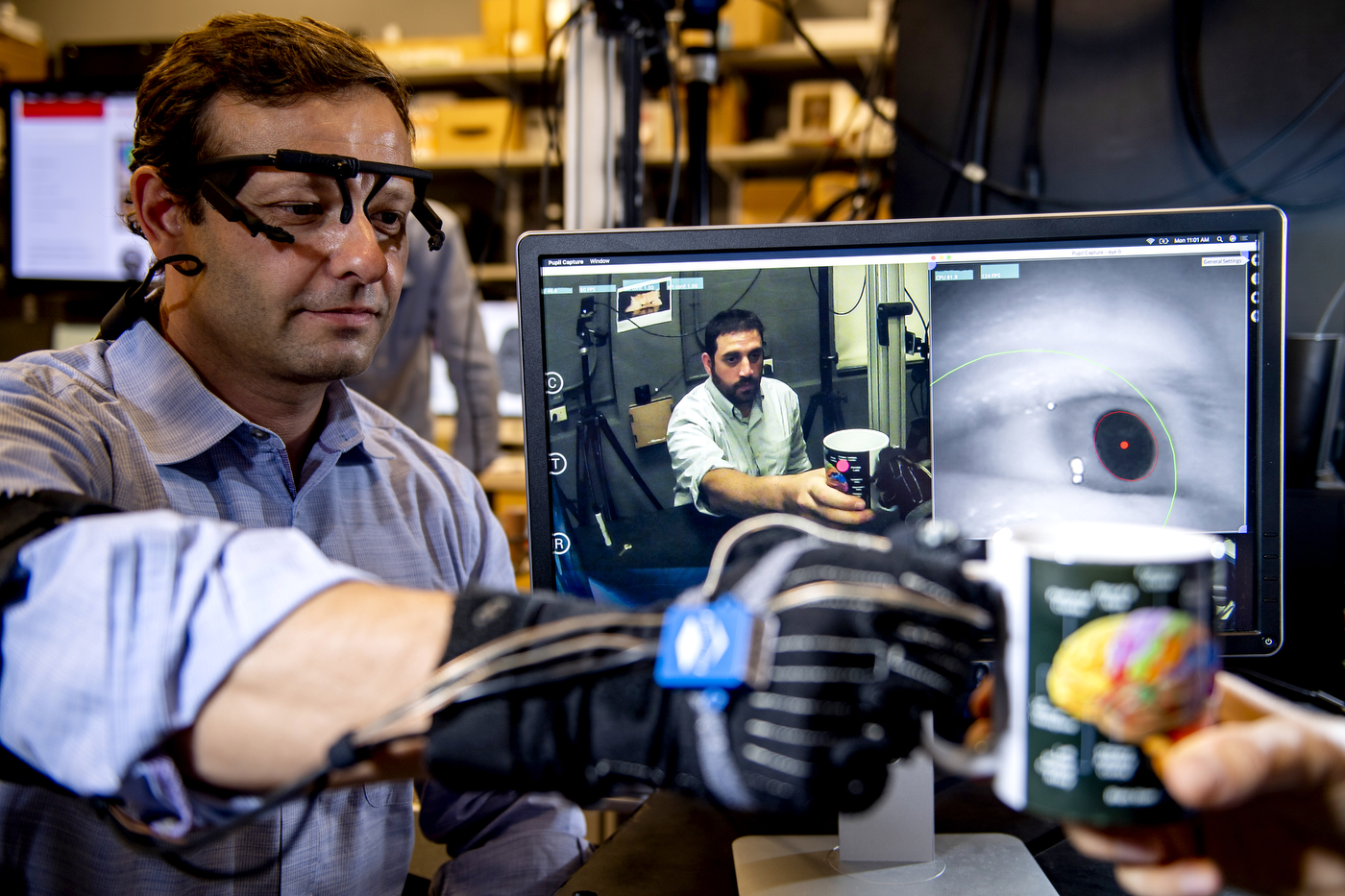

There are four roles that an individual might take during these interactions, Tunik says. The physical indications will vary depending on whether a person is giving or receiving the object, and whether they are initiating the exchange or following the other person’s lead. The researchers will be tracking eye gaze, brain signals, muscle activity, and physical motion of participants acting in all four of these roles with other humans.

“We’re extracting all these biophysical signals from the human, and we’re feeding them into the robot as information,” Tunik says.

That’s where the Northeastern engineers step in. Deniz Erdogmus, a professor of electrical and computer engineering, will be leading the effort to train robots to use that information to infer what a human intends to do and respond accordingly. Taskin Padir, an associate professor of electrical and computer engineering, will be focusing on making the robots move in ways that help humans understand their intentions as well.

Robots typically move like, well, robots. Their range of motion isn’t constrained by human-like joints, and they can move at a smooth, constant speed. Human movement is different: plotted over time, our speed looks more like a parabola, rising as we start and falling as we approach the end of the movement. We may also include other small motions that indicate when we would like to give or receive an object or where that exchange is going to occur.

“If we can incorporate those subtle hints in the robot movements, then a human can have a better understanding of when a robot is ready to hand something over,” Padir says. “We think that will be a more natural interaction.”
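The contrast between a constant-speed robot motion and a human-like motion that speeds up and then slows down can be sketched in a few lines of Python. This is only an illustration, not the researchers' method: it assumes the minimum-jerk model, a commonly used description of point-to-point human reaching, and the function names, distance, and duration are hypothetical choices made for the example.

```python
def constant_speed(distance, duration, t):
    """Speed of a motion that covers `distance` at a fixed rate (robot-like)."""
    return distance / duration


def min_jerk_speed(distance, duration, t):
    """Speed under the minimum-jerk model (assumed here as a stand-in for
    human-like motion): zero at the start and end, highest at the midpoint."""
    tau = t / duration  # normalized time, from 0 to 1
    return (distance / duration) * (30 * tau**2 - 60 * tau**3 + 30 * tau**4)


def speed_profile(speed_fn, distance, duration, steps=10):
    """Sample a speed function at evenly spaced times across the movement."""
    return [
        speed_fn(distance, duration, duration * i / steps)
        for i in range(steps + 1)
    ]


if __name__ == "__main__":
    # Hypothetical hand-off reach: 0.5 m covered in 1.0 s.
    distance, duration = 0.5, 1.0
    robot = speed_profile(constant_speed, distance, duration)
    human = speed_profile(min_jerk_speed, distance, duration)
    for i, (r, h) in enumerate(zip(robot, human)):
        t = duration * i / 10
        print(f"t={t:.1f}s  constant={r:.3f} m/s  min-jerk={h:.3f} m/s")
```

Both profiles cover the same distance in the same time, but the minimum-jerk version starts and ends at rest and peaks mid-movement, which is the kind of speed cue a human partner might read as "the hand-off is about to happen."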

The researchers will primarily be using Valkyrie, a humanoid robot developed by NASA with four-fingered hands, to test their algorithms and practice exchanges.

“Imagine Valkyrie passing a screwdriver or a drill to an astronaut making repairs, or vice versa,” Padir says. “Humans have a very smooth way of collaborating when it comes to these tasks. How do we capture that unspoken communication and instill it in a robot?”
