How one philosopher is thinking about the problem of deepfakes, AI and the ‘arms race’ to rein in deception online.

Deepfakes and AI-generated images are ubiquitous, which means it’s getting increasingly difficult to sort fact from fiction.

According to one source, the deepfake technology software market is a $73 billion industry. AI-based tools such as Dall-E and Midjourney, which are trained on tens of thousands of actual human faces, can produce startlingly lifelike images of people.

Advances in AI image generation have precipitated a wave of deepfakes that have stirred controversy and menaced pop stars, celebrities and politicians such as Taylor Swift, Scarlett Johansson and Donald Trump. Explicit deepfakes of Swift recently prompted Elon Musk to block some searches for the singer on X, while deepfakes of politicians, including AI-generated voices in robocalls, threaten to undermine the democratic process.

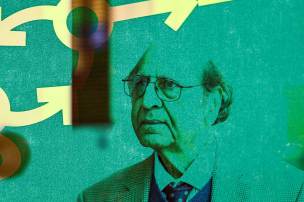

Don Fallis, professor in the Department of Philosophy and Religion and Khoury College of Computer Sciences at Northeastern University, has written about the challenges that deepfakes and AI systems pose to society writ large. Right now, he sees algorithmically guided solutions to deepfaking, and the AI tools themselves, as locked in an “arms race.” In an information-rich world, he says, this ratcheting up of AI tech poses a threat to human knowledge.

Northeastern Global News spoke with Fallis at length about the subject. His comments have been edited for brevity and clarity.

Tell us about your broader research interests, and what led you to your thinking about AI and deepfakes.

I do research in epistemology or the study of knowledge. In the last 15 or so years, I’ve gotten interested in looking at lying and deception, basically cases where people are trying to interfere with our knowledge and looking at exactly how that works.

As part of that larger project, I did this investigation of deepfakes to try to understand, epistemically, what was going on: exactly why they are a threat to knowledge. In what respect do they cause false beliefs, and in what respect do they prevent people from acquiring true beliefs? And even when people are able to acquire true beliefs, do they interfere with their acquiring justified beliefs, so that it’s not knowledge?

We use videos as a way of accessing information about the world that we otherwise would be too far away from — either in time or in space — to access. Videos and photographs have this benefit of expanding the realm of the world that we can acquire knowledge about. But if people are easily able to make something that looks like it’s a genuine photograph or video, then that reduces the information flow that you can get through those sources. Now the obvious problem is, for example, that somebody fakes a video of some event, and the user is either misled into thinking that that event occurred, or led into skepticism about it because of the ubiquity of, say, deepfakes.

It seems to me that we develop these technologies and then they get used as a way of accessing and sharing information. And once they are being used in that way, there’s a motivation for people who have an interest in deceiving other people to exploit them.

As deepfakes become more sophisticated, do you think we’ll be able to rein them in? What countermeasures currently exist?

What’s going on now is interesting. Essentially, AI is being used to create synthetic images, on the one hand, and then you have people deploying AI to create better ways to detect synthetic images, on the other. It seems like there’s going to be a continual arms race in that regard: as the software for utilizing the necessary generative AI becomes easier to use and more widely disseminated, the probability that deepfakes will become more and more common increases. The same thing could very well happen with detection software. And, of course, the people who are creating the deepfakes are not standing still; they’re trying to train their systems to become even more deceptive.

As online consumers of information, how do we play a role in the perpetuation and proliferation of deepfakes? What sorts of responsibilities do we have, as the problem of misinformation and disinformation gets worse?

As consumers, we have to consider things like who the source of this video is, whether the content is at all plausible given what one knows about the world, and so on. But you also have to ask: what can I, as a consumer of information, do to make sure that I’m not misled? If you’re going to be a critical consumer of information, then you have to be aware that people can lie to you; they can write false information. In essence: you can’t take videos and photographs as the gold standard, because they’re now just as susceptible to manipulation as anything else.

It strikes me that we, as a society, should also be thinking about what the powerful players, like these various social media platforms, can do to facilitate consumers being able to make these kinds of determinations on their own. Of course, this conversation has been ongoing. But presumably, it’s not going to be the case that all of us, as individuals, need to become experts on the latest methods of AI detection; we’re more likely heading towards a world where all of this is going to be going on in the background, so that all of us non-experts can be alerted to the presence of deepfakes as they appear.

Tanner Stening is a Northeastern Global News reporter. Email him at t.stening@northeastern.edu. Follow him on X/Twitter @tstening90.